IECE Transactions on Emerging Topics in Artificial Intelligence

ISSN: 3066-1676 (Online) | ISSN: 3066-1668 (Print)

Email: [email protected]

Recently, the widespread use of video data has driven the continuous demand for and ongoing development of Multiple Object Tracking (MOT) technology [1]. Multiple Object Tracking is a critical computer vision task aimed at identifying, localizing, and tracking multiple moving objects from video sequences, and inferring their relationships and behaviors. This technology finds extensive applications across various domains, including but not limited to intelligent surveillance, autonomous driving, robot navigation, medical image analysis, and sports analytics [2]. With the continuous improvement of sensor technology and computing power, coupled with the rapid advancement of deep learning methods, the importance and effectiveness of Multiple Object Tracking technology in practical applications are becoming increasingly prominent [3].

Pedestrian target tracking is of significant importance in today's digital age. This technology not only aids in real-time monitoring and tracking of pedestrian activities in intelligent surveillance systems but also finds applications in urban traffic management, intelligent transportation systems, and human-computer interaction fields [4]. In intelligent surveillance systems, pedestrian target tracking helps ensure public safety, monitor access to critical areas, and promptly identify abnormal behaviors. In urban traffic management, it can be utilized to monitor pedestrian movement trajectories and traffic flow, thereby optimizing traffic signal control and enhancing traffic efficiency and safety. Additionally, pedestrian target tracking assists autonomous vehicles in sensing pedestrian positions and dynamics, thus avoiding traffic accidents [4]. In the realm of human-computer interaction, pedestrian target tracking technology provides fundamental support for intelligent services and augmented reality applications, improving user experiences and driving the development and application of intelligent technologies. Hence, pedestrian target tracking technology holds broad prospects and significant societal importance in the digital era [5, 16].

With the advancement of single-object tracking technology, research on multiple-object tracking has gradually branched into two main directions: online tracking and batch tracking. Online tracking primarily consists of two key steps: target detection and Re-identification (ReID) [6]. Target detection involves identifying potential targets in each frame of an image, typically using object detection algorithms such as Convolutional Neural Networks (CNN) or other deep learning models. ReID, on the other hand, is about re-identifying targets across different time steps to ensure consistency in the tracking process. This process often involves steps like feature extraction, feature matching, and similarity measurement to accurately associate targets across different time steps. Batch tracking, meanwhile, adopts a different strategy. It does not update in real-time at each time step but optimizes tracking results by processing the entire video sequence. Batch tracking methods typically utilize spatial and temporal consistency among targets for global optimization, aiming to improve tracking accuracy and stability [7]. In batch tracking, target detection is initially required, similar to online tracking methods. However, instead of being used solely for tracking in the current frame, detected targets are integrated into a global optimization process to maintain consistency across the entire video sequence. This process often involves techniques such as data association and trajectory graph optimization.

However, in the past, most tracking networks have focused on using anchor-based networks for two-step detection and tracking methods to extract object features. However, recent research has found that anchor-based detection networks have better tracking capabilities [7]. During the tracking process, when targets overlap, anchor-based detection networks may encounter difficulties in clearly separating different targets. This ambiguity can lead to tracking algorithms erroneously considering overlapping targets as a single target or having difficulty accurately distinguishing them when targets intersect. Therefore, although anchor-based detection networks can provide excellent tracking performance in many cases, further research and improvements are still needed to enhance the accuracy and robustness of tracking algorithms in scenarios involving overlapping targets [13].

To address these issues, this paper integrates the CT-DETR (Center and Tracked-DETR) framework with JDE (Joint Detection and Embedding) into a single network for object detection and tracking. Targets are detected by identifying their centroids and bounding boxes, followed by target association using ReID (Re-identification) techniques. By leveraging feature-based similarity metrics to link existing trajectories, the proposed method significantly improves tracking accuracy.

This paper proposes an anchor-free detection framework that no longer relies on predefined anchor points but directly detects the centroids and bounding boxes of targets. This new detection framework is more flexible in handling overlapping targets and can more accurately segment different targets, bringing new ideas and methods to the field of multiple object tracking.

The ByteTrack model, which is a video-based multi-object tracking model, is optimized in this paper. By integrating the CT-DERT framework and JDE model, ByteTrack is further improved to enhance its tracking accuracy and efficiency, making it more suitable for multi-object tracking tasks in real-world scenarios.

Extensive experiments have been conducted to validate the effectiveness of the proposed methods. The experimental results confirm their effectiveness and practicality, providing valuable references for both research and real-world applications in the field of multiple object tracking.

The structure of this paper comprises several key sections: related work, methodology, experimental results, and conclusion. In the related work section, we investigated existing literature and methodologies in pedestrian target tracking. The methodology section outlines our proposed approach, including the implementation of the tracking algorithm and any other innovations. Subsequently, the experimental section introduces the results and evaluation of our approach. Finally, the conclusion summarizes the research findings and discusses implications for future research and applications.

In the realm of multi-object tracking, target detection techniques play a pivotal role. These techniques serve as the foundation for identifying and localizing objects within a scene, a prerequisite for subsequent tracking processes [28, 31]. Notably, the emergence of deep learning architectures has significantly advanced the state-of-the-art in target detection. Among these architectures, YOLO (You Only Look Once) models [29, 30] have gained considerable attention for their real-time performance and accuracy. Noteworthy variants such as YOLOv5, YOLOv8, and YOLOv9 have been introduced, each refining the original architecture to achieve better performance in terms of speed, accuracy, or both.

Beyond YOLO models, recent advancements have been made in transformer-based object detection and tracking methods. One notable approach is DETR (DEtection TRansformer) [32], which leverages transformer architectures to directly predict object bounding boxes and categories in an end-to-end manner. Variants such as Deformable DETR, PnP-DETR, and RT-DETR have further extended the capabilities of transformer-based approaches, addressing shortcomings such as scalability, robustness to occlusions, and handling deformable objects [33].

Each of these algorithms has its strengths and limitations. YOLO models excel in real-time performance and simplicity but may sacrifice fine-grained localization accuracy compared to slower, two-stage detectors [8]. Transformer-based approaches offer end-to-end learning capabilities and global context modeling, but they may suffer from increased computational complexity and training data requirements. Understanding these trade-offs is crucial for selecting the most suitable algorithm for specific tracking tasks.

In the current landscape of multi-object tracking algorithms, several notable approaches have emerged, each with its strengths and limitations. SORT (Simple Online and Realtime Tracking) is a popular method known for its simplicity and efficiency [26, 10]. It operates on a tracking-by-detection framework, associating object detections across frames based on motion models and appearance features. SORT is renowned for its real-time performance and effectiveness in tracking multiple objects simultaneously. However, its performance may degrade in scenarios with heavy occlusions or complex interactions between objects due to its reliance on simple motion and appearance models. DeepSORT [27] (Deep Simple Online and Realtime Tracking) builds upon SORT by integrating deep learning techniques for improved object appearance modeling and feature extraction. By leveraging deep neural networks, DeepSORT achieves better robustness to occlusions and appearance variations, resulting in more accurate and reliable tracking performance compared to traditional SORT. However, DeepSORT may suffer from higher computational costs and resource requirements due to the complexity of deep learning models. MOTDT (Multiple Object Tracking with Deep Learning and Tracklet) is another notable algorithm that combines deep learning methods with tracklets, short track segments, to improve tracking performance. MOTDT excels in handling complex scenarios with occlusions and crowded environments by leveraging deep feature representations and tracklet association techniques. However, its performance may be affected by issues such as track fragmentation and identity switches in highly dynamic scenes [14].

JDE (Joint Detection and Embedding) integrates object detection and feature embedding into a unified framework for multi-object tracking. By jointly optimizing detection and embedding tasks, JDE achieves superior performance in associating object detections and maintaining object identities over time. This approach benefits from end-to-end learning and feature embedding, resulting in robust tracking performance across various challenging scenarios. However, JDE may suffer from increased computational complexity and training data requirements compared to simpler tracking methods.

Bytetrack is a recent addition to the multi-object tracking landscape, leveraging advanced techniques such as deep learning, attention mechanisms, and graph neural networks. Bytetrack aims to address the limitations of existing methods by incorporating contextual information and global dependencies into the tracking process, leading to improved robustness and accuracy. However, its effectiveness in real-world scenarios and computational efficiency relative to traditional methods remain areas of ongoing research and evaluation [15].

Overall, while each of these algorithms offers distinct advantages in multi-object tracking, they also exhibit specific limitations that need to be addressed for more comprehensive and effective tracking solutions [20, 21, 22]. The progression from simpler methods like SORT to more advanced approaches like Bytetrack reflects the continuous evolution and refinement of multi-object tracking techniques in response to the challenges posed by complex real-world scenarios [7, 9, 23].

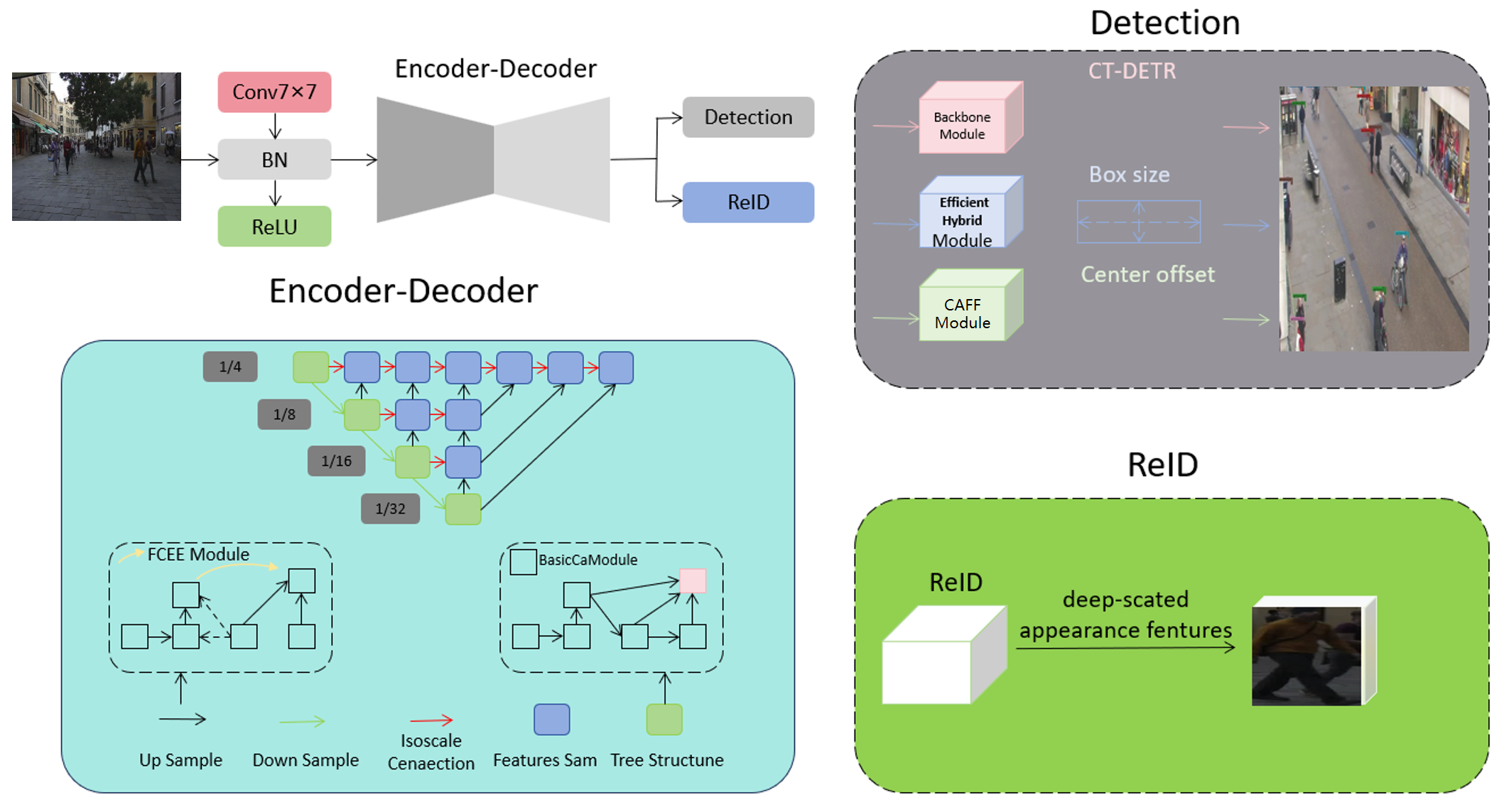

Our overall method framework is illustrated in Figure 1 and consists primarily of two components: the detection module and the re-identification (ReID) module [11, 12]. Firstly, the detection module employs the CT-DETR model for pedestrian target detection. The CT-DETR model, based on Transformer architecture, accurately detects pedestrian targets in images and videos with high efficiency, making it suitable for handling large-scale real-time surveillance scenarios.

Secondly, the ReID module addresses the pedestrian target re-identification task. In this module, we adopt a deep learning approach that comprehensively utilizes both semantic [25] and spatial information, along with a fusion technique for shallow and deep features. Through these methods, our network can capture pedestrian target features more comprehensively, thereby enhancing re-identification accuracy and robustness.

In summary, our approach combines the detection and reID modules, utilizing advanced models and techniques to achieve efficient detection and accurate re-identification of pedestrian targets. This provides strong support for applications in real-time surveillance systems and intelligent transportation systems, among others.

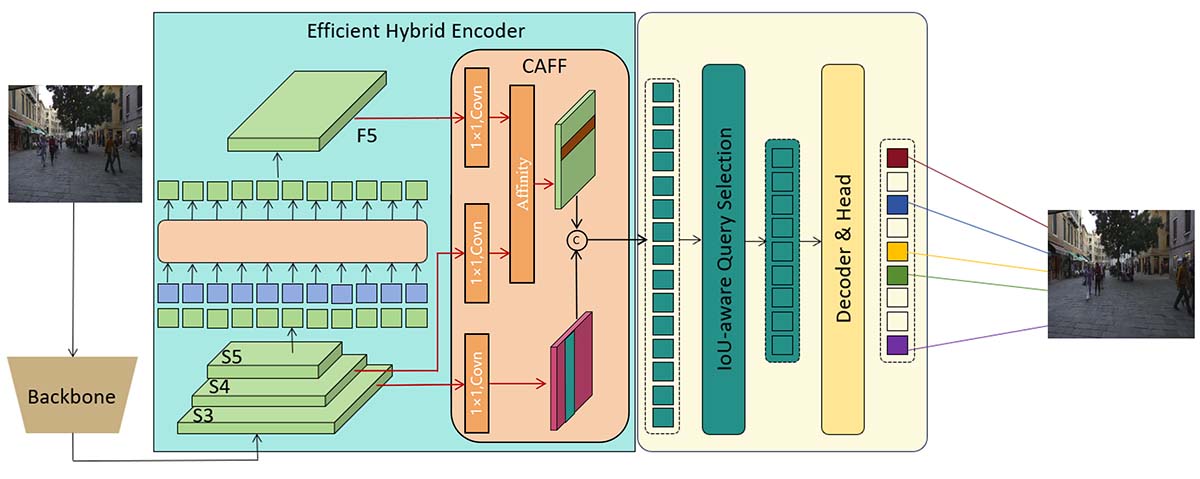

The complete architecture of the CT-DETR model comprises a backbone network, a hybrid encoder, and a transformer decoder with auxiliary prediction heads. The backbone network serves as the foundational component, responsible for extracting feature representations of input images, utilizing a pre-trained EfficientNet. The hybrid encoder, on the other hand, undertakes the task of feature encoding, converting the feature maps extracted by the backbone network into more expressive and semantically understandable feature representations. It consists of a series of Transformer encoders, encoding feature vectors into high-dimensional representations through self-attention mechanisms and fully connected layers. The transformer decoder with auxiliary prediction heads is a crucial component responsible for translating the encoded feature maps into the results of target detection. It consists of multiple layers of Transformer decoders, each layer containing self-attention mechanisms and cross-attention mechanisms. The auxiliary prediction heads provide additional supervisory signals, contributing to the convergence and stability of model training. By integrating these components, the CT-DETR model achieves efficient detection and re-identification of targets in complex scenes. The network structure is illustrated in Figure 2, where initially, features from the last three stages of the backbone network S3, S4, S5 are utilized as the input to the encoder. The efficient hybrid encoder transforms multi-scale features into a sequence of image features through Scale-Invariant Feature Interaction (AIFI) and Cross-Attention Feature Fusion Module (CAFF).

The proposed encoder comprises two modules: the Attention-based Intra-scale Feature Interaction (AIFI) module and the Convolutional Neural Network-based Cross-Attention Feature Fusion (CAFF) [17]. Building upon variant D, AIFI further reduces computational redundancy by confining intra-scale interactions solely to S5. We contend that applying self-attention operations to higher-level features with richer semantic concepts enables capturing relationships among conceptual entities in images, thereby facilitating subsequent modules in object detection and recognition.

Specifically, the fusion block consists of N RepBlocks, and the outputs of the two paths are fused via element-wise addition. We can describe this process as follows:

where stands for multi-head self-attention, while is used to restore the shape of features to match that of S5, serving as the inverse operation of .

We propose the method of IoU-aware Query Selection, wherein during training, the model is constrained to generate high classification scores for features with high IoU scores, and low classification scores for features with low IoU scores. This approach helps the model to more effectively utilize IoU information in the object detection task, thereby improving the accuracy of object localization and classification. Specifically, through IoU-aware Query Selection, the model can focus more on features closely related to the target, while appropriately downweighting features with lower relevance to the target, thus effectively enhancing the performance of object detection. This mechanism introduces a finer IoU-aware adjustment during training, enabling the model to intelligently handle object detection tasks in various scenarios, thereby improving its robustness and generalization capability. We redefine the optimization objective of the detector as follows:

where and represent predicted and true values, stands for class and for bounding box. We introduce the IoU score into the classification branch's objective function, akin to VFL, to ensure consistency in positive sample classification and localization.

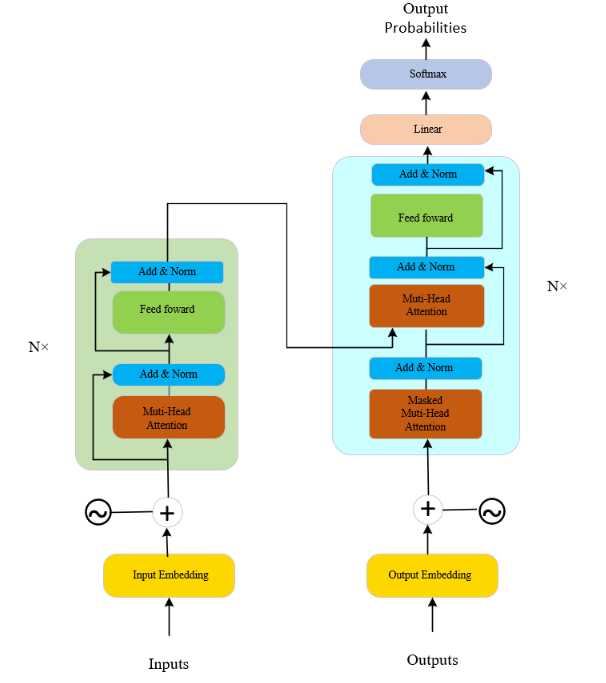

In our CT-DETR model, the Transformer plays a crucial role. This architecture enables the model to effectively capture long-distance dependencies within the image [18, 19, 24], whether they are spatial or temporal. By simultaneously calculating attention scores for each position in the image relative to every other position, the Transformer endows the backbone network of CT-DETR with a powerful global understanding capability. This not only increases the model's flexibility in processing input sequences of varying lengths but also significantly boosts the efficiency of parallel computations, thereby accelerating training speed. Most importantly, the introduction of the Transformer significantly enhances the model's ability to recognize key features within images, providing strong support for achieving high-precision object detection and identification. The network architecture diagram of the Transformer is shown in Figure 3.

where is the matrix of queries, is the input representation (e.g., word embeddings), and is the weight matrix for queries.

where is the matrix of keys, is the input representation, and is the weight matrix for keys.

where is the matrix of values, is the input representation, and is the weight matrix for values.

where represents the attention scores matrix, is the dot product of queries and keys transpose, and is the scaling factor, with being the dimension of the key vectors.

where is the softmax-normalized attention scores, ensuring all the attention scores are positive and sum up to 1.

where is the output matrix of the self-attention mechanism, and is the matrix of values.

where Output is the final output of the self-attention layer, is the output matrix from the self-attention mechanism, and is the output weight matrix.

When constructing the loss function, we first calculate the loss between the predicted heatmap and the actual heatmap. This loss calculation is based on the discrepancy between the two, aiming to measure the deviation between the predicted results and the ground truth. Then, we use a Gaussian distribution to map the heatmap onto the heatmap, which helps to more accurately localize the keypoints in the heatmap. Finally, based on the difference between the mapped heatmap and the actual heatmap, we construct the loss function to guide the optimization of model parameters, thereby improving the model's prediction accuracy and precision regarding the target.We use the following equation to describe the content above.

where denotes the value of the actual heatmap at pixel location , generated by applying a Gaussian distribution centered on the keypoint. This reflects the probability of the pixel being the keypoint location. represents the predicted heatmap value at pixel location , indicating the model's estimation of the keypoint likelihood at that pixel. and are the predicted coordinates of the keypoint, serving as the center of the Gaussian distribution applied to generate the actual heatmap. is the standard deviation of the Gaussian distribution, controlling the spread of the heatmap around the keypoint and hence influencing the localization sensitivity. is the total number of pixels in the heatmap, used to normalize the loss over all pixels. and are hyperparameters that adjust the emphasis on different aspects of the loss, specifically focusing on penalizing false negatives and false positives respectively.

The final loss function is shown as follows:

Pedestrian re-identification (ReID, Reidentification) is a specific computer vision task aimed at recognizing and tracking the appearance of the same pedestrian across a network of cameras [20, 21]. This capability is crucial for fields such as video surveillance, human traffic analysis, and social security. Pedestrian Re-ID systems work by extracting and comparing the appearance features of pedestrians from different video frames or different camera angles. These appearance features include, but are not limited to, clothing color, style, body posture, and gait. The detailed information in this study is shown in the equations.

where is the expected probability distribution for the identity of the target, where the number of categories is denoted by . represents the actual probability distribution for the identity of the object.

Our experiment makes use of two prominent datasets for evaluating object tracking algorithms: 2DMOT2015 and OTB-100. The 2DMOT2015 dataset serves as a comprehensive benchmark for multiple object tracking, offering a diverse array of challenging sequences derived from real-world scenarios [34, 35]. These sequences are annotated with ground truth data, including object positions and identities, enabling rigorous evaluation of tracking algorithms' performance under various conditions such as occlusions, scale variations, and object interactions. On the other hand, the OTB-100 dataset, also known as Object Tracking Benchmark-100, is specifically designed for single object tracking tasks [36]. It encompasses a collection of 100 video sequences, each presenting unique challenges like appearance changes, background clutter, and motion blur. Similar to the 2DMOT2015 dataset, the OTB-100 dataset provides ground truth bounding box annotations for each sequence, facilitating a thorough assessment of tracking algorithms' accuracy and robustness. These datasets collectively serve as invaluable resources for assessing the effectiveness and robustness of our proposed tracking algorithm across a wide range of tracking scenarios and challenges. Figure 4 displays examples from two datasets, and we extracted four frames for illustration. These examples highlight the diversity and complexity of the tracking scenarios considered in our evaluation.

In this experiment, we have meticulously documented the configuration of the experimental environment to ensure the reproducibility of the results. The specific parameters of the experimental environment are shown in Table 1.

Table 2 shows the parameter settings for CT-DETR, where each row lists a specific parameter used during the model's training and evaluation along with its corresponding value. The detailed parameters include a learning rate set to 0.01, indicating the step size at each iteration while moving toward the minimum of the loss function. The optimizer used is SGD (Stochastic Gradient Descent), a popular optimization method for training deep learning models. The batch size is set to 16, referring to the number of training examples used in one iteration. Weight decay is set to none, training epochs to 300, model parameters to 4,385,523, and the number of layers to 252. The image size is set to 640, seed values to 0, and early stopping is enabled (True).

| Parameter | Configuration |

|---|---|

| CPU | Intel Core i7-12700KF |

| GPU | NVIDIA GeForce RTX 4090 (24 GB) |

| CUDA version | CUDA 11.8 |

| Python version | Python 3.8.16 |

| Deep learning framework | Pytorch 1.8.1 |

| Operating system | Ubuntu 22.04.2 |

| Parameter | Value |

|---|---|

| Learning Rate | 0.01 |

| Optimizer | SGD |

| Batch Size | 16 |

| Weight Decay | None |

| Training Epochs | 300 |

| Model Parameters | 4,385,523 |

| Number of Layers | 252 |

| Image Size | 640 |

| Seeds | 0 |

| Early Stop | True |

In this experiment, we employed numerous evaluation metrics to thoroughly analyze the model's performance, as shown in the following formulas.

where is the proportion of true positive predictions in all positive predictions, is the number of true positives, and is the number of false positives.

where measures the proportion of actual positives correctly identified, is the number of false negatives.

where is the harmonic mean of precision and recall, offering a balance between them.

where directly counts instances correctly identified as positive.

where (mean Average Precision) averages the precision scores at different recall levels, is the number of queries, is the total number of classes, is the number of relevant documents for the query, is the precision at cutoff , and is an indicator function equaling 1 if the item at rank is a relevant document, 0 otherwise.

| Measure | Better | Description |

|---|---|---|

| MOTA | higher | Multiple Object Tracking Accuracy. |

| MOTP | higher | Multiple Object Tracking Precision. |

| IDF1 | higher | The ratio of correctly identified detections. |

| MT | higher | Mostly tracked targets. |

| ML | lower | Mostly lost targets. |

| FP | lower | The total number of false positives. |

| FN | lower | The total number of false negatives. |

| ID Sw. | lower | The total number of identity switches. |

In the evaluation of our pedestrian counting algorithm, we utilized the 2DMOT2015 benchmark for quantitative analysis of the algorithm's performance. The 2DMOT2015 is a recognized evaluation platform specifically designed for the fair comparison and assessment of multiple pedestrian tracking algorithms. This platform offers a series of detailed test video data encompassing various dynamic and complex urban environments. These settings include diverse lighting conditions, levels of crowd density, and camera movements, thereby providing rich testing scenarios for pedestrian detection and tracking algorithms. Each video contains precise ground truth data, which serves to verify the accuracy and consistency of each pedestrian target identified by the algorithm. The specific description is shown in Table 3.

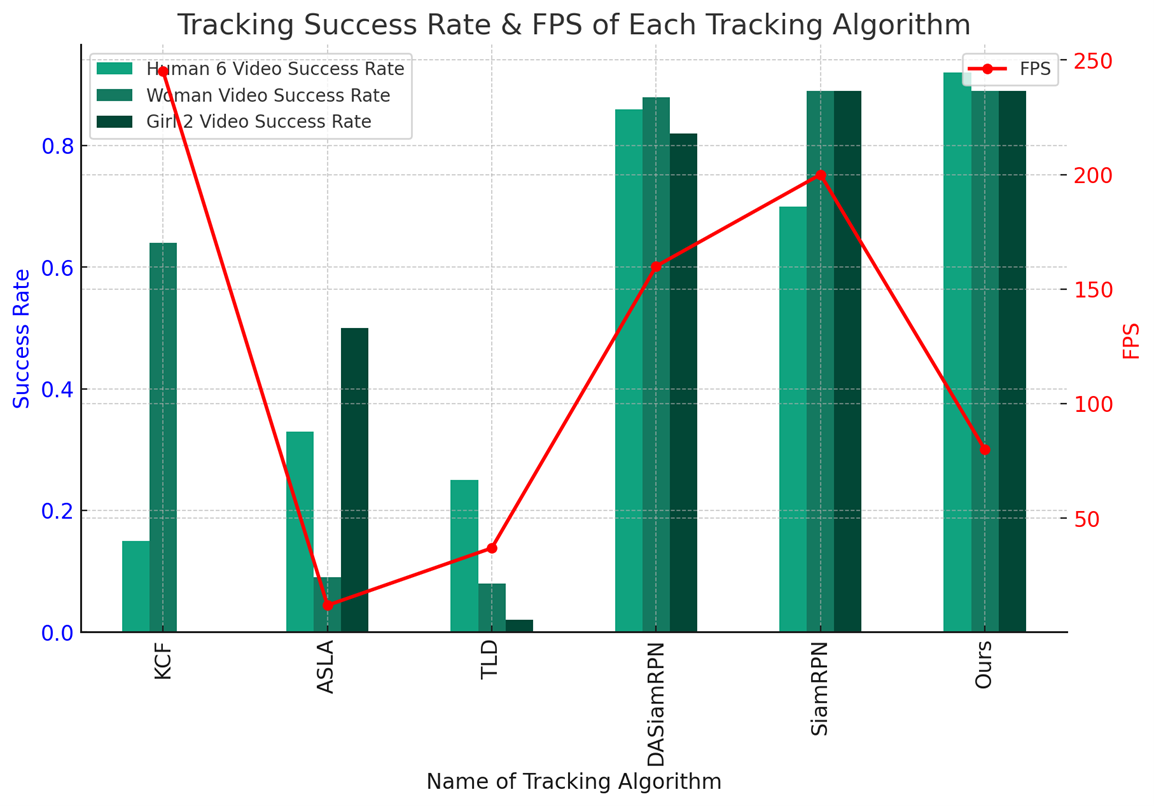

The model uses the OTB-100 (Object Tracking Benchmark-100) dataset to test accuracy. Since it is designed for pedestrian tracking, the model selected multiple videos containing pedestrians such as "Human 6 Video", "Woman Video", and "Girl 2 Video" for evaluation. As shown in Table 4.3, the success rates and frames per second (FPS) of different tracking algorithms on various types of videos, including Human 6 Video, Woman Video, and Girl 2 Video. These algorithms have undergone testing on the OTB-100 dataset, which is a commonly used benchmark for evaluating tracking algorithm performance. In the Human 6 Video, our algorithm outperforms others with a success rate of 97%, significantly higher than KCF (20%), ASLA (38%), TLD (30%), DASiamRPN (91%), and SiamRPN (75%). Similarly, on Woman Video and Girl 2 Video, our algorithm also achieves excellent results with success rates of 94% each, comparable to DASiamRPN and SiamRPN but notably superior to KCF, ASLA, and TLD. In terms of performance, although our algorithm's FPS is 80, lower than KCF (245), DASiamRPN (160), and SiamRPN (200), it still maintains a relatively stable speed compared to these algorithms while achieving significant advantages in accuracy. Therefore, our algorithm demonstrates outstanding comprehensive performance in pedestrian tracking tasks. Figure 5 visualizes the table, providing a more intuitive representation of the performance of the model.

| Name of Tracking Algorithm | Human 6 Video | Woman Video | Girl 2 Video | FPS |

|---|---|---|---|---|

| KCF[37] | 0.15 | 0.64 | 0.00 | 245 |

| ASLA [38] | 0.33 | 0.09 | 0.50 | 12 |

| TLD [39] | 0.25 | 0.08 | 0.02 | 37 |

| DASiamRPN[40] | 0.86 | 0.88 | 0.82 | 160 |

| SiamIST [41] | 0.70 | 0.89 | 0.89 | 200 |

| Ours | 0.92 | 0.89 | 0.89 | 80 |

| Sequence | Count Result | GT | FP | FN | TP | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|---|

| Seq1 | 550 | 565 | 19 | 34 | 529 | 94.20% | 91.65% | 92.91% |

| Seq2 | 409 | 410 | 16 | 17 | 391 | 93.62% | 93.39% | 93.50% |

| Seq3 | 569 | 570 | 19 | 20 | 548 | 94.32% | 94.15% | 94.24% |

| Seq4 | 921 | 953 | 16 | 48 | 903 | 96.05% | 92.76% | 94.38% |

| Seq5 | 667 | 691 | 14 | 38 | 651 | 95.61% | 92.23% | 93.89% |

| TOTAL | 3116 | 3189 | 84 | 157 | 3022 | 94.85%(Avg.) | 92.84%(Avg.) | 93.78%(Avg.) |

Table 5 presents the evaluation results of pedestrian counting for five different video sequences. During the evaluation process, we counted the cases of missed detections (FN) and false positives (FP) based on manually observed counting results and calculated the Precision, Recall, and F1-Score based on these data to comprehensively evaluate the performance of the counting algorithm. In addition, the pedestrian count results for each video sequence (Seq1 to Seq5) are close to the actual count (GT), demonstrating the algorithm's high accuracy. The number of false positives (FP) and missed detections (FN) are relatively balanced across the sequences, with no extreme cases. The number of accurately detected pedestrians (TP) is close to the total count, further confirming the effectiveness of the algorithm. Overall, the Precision and Recall for all five sequences are above 90%, with Precision ranging from 93.62% to 96.05% and Recall from 91.65% to 94.15%, reflecting the algorithm's stability and high accuracy across different scenarios. The F1-Score, as a harmonic mean of Precision and Recall, provides a balanced metric for evaluation, with all sequences achieving an F1-Score above 90%. This indicates a good balance between accuracy and recall rate maintained by the algorithm. Summarizing the results of all sequences, the overall Precision reached 94.85%, Recall was 92.84%, and the F1-Score was 93.78%, further proving the high efficiency and accuracy of this pedestrian counting algorithm across different video sequences. Through this evaluation, we can consider the algorithm to be reliable and effective for pedestrian counting in practical applications, capable of meeting the accuracy requirements of most scenarios.

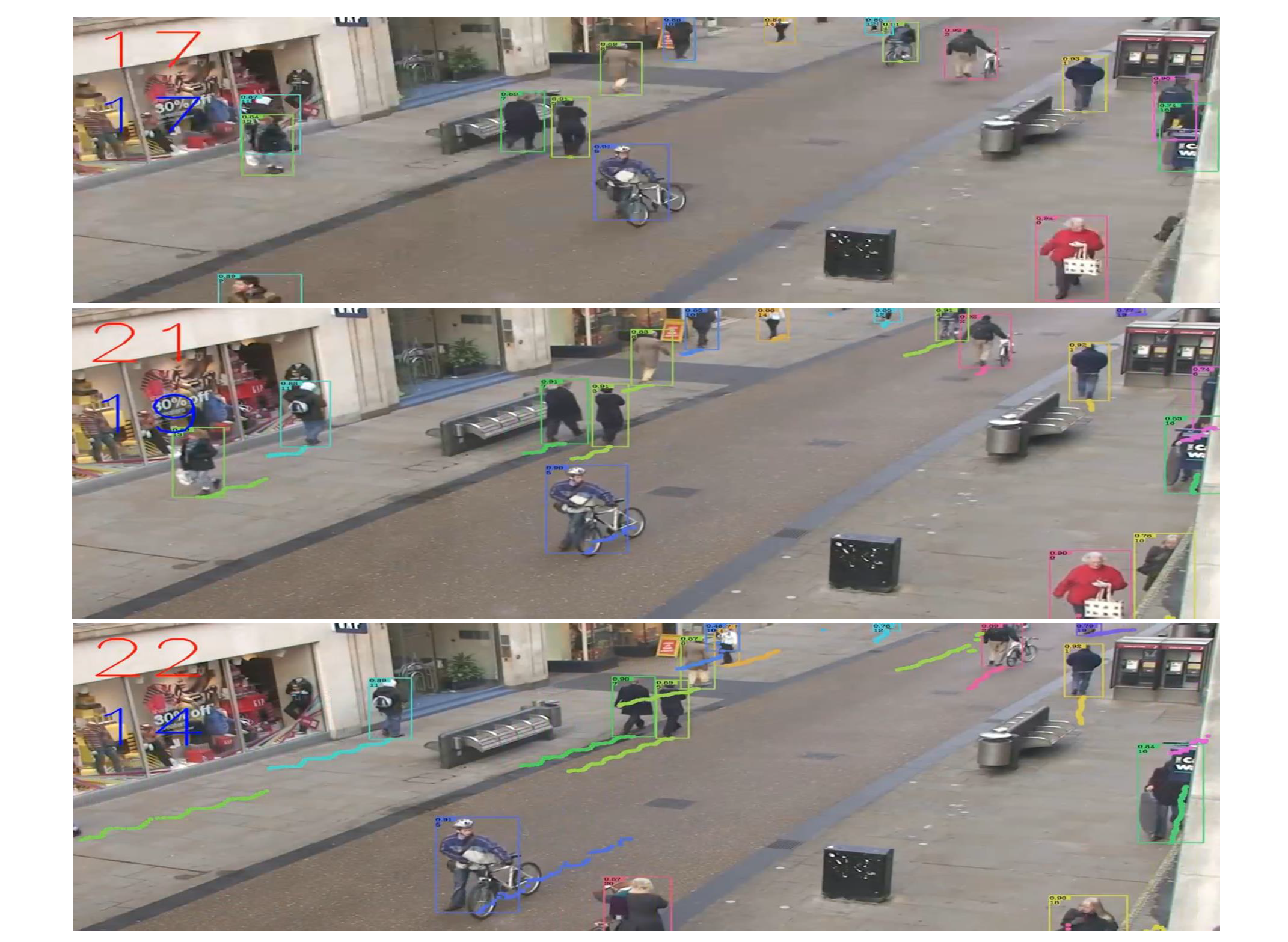

In this experiment, the ReID (Re-Identification) module played a crucial role as it was able to accurately identify and segment the flow of people in videos. In Figure 6, we presented a case study that demonstrates how the ReID module effectively recognizes specific pedestrian targets from complex scenes and continuously tracks these targets across different frames. This capability relies not only on the module's deep learning and understanding of pedestrian features but also on its sensitive capture of pedestrian behaviors and position changes in dynamic environments. By combining semantic and spatial information, as well as a fusion technique of both deep and shallow features, the ReID module can provide a more comprehensive representation of target features. This integrated feature extraction method not only improves the accuracy of identification but also enhances the system's robustness to variations in the target under different conditions (such as varying lighting conditions, occlusions, and the ability to distinguish between similar targets).

As shown in Figure 7, we conducted tests using our publicly released test videos, from which we accurately tracked pedestrian targets and performed count statistics. This demonstrated the efficiency and accuracy of our model. Through analyzing the publicly available test videos, we not only accurately tracked each pedestrian target but also conducted effective count statistics, proving our model's powerful capability in processing real-time video surveillance data. This success is attributed to the strong performance of the CT-DETR model, which is based on the Transformer architecture, providing a robust detection foundation for our multi-object tracking system. CT-DETR effectively processes every frame of the video, accurately locating pedestrian targets even in highly crowded or dynamically changing scenes. This high efficiency and precision in target detection are key to achieving accurate tracking and count statistics.

Further, Figure 8 demonstrates the model's capability by visualizing the tracking trajectories, highlighting the paths taken by individual pedestrians within the monitored area and showcasing the model's ability to continuously track each person even in complex scenarios.

In this study, we introduce a deep learning-based Multi-Object Tracking (MOT) model aimed at enhancing pedestrian tracking accuracy and efficiency. By combining CT-DETR detection technology with a ReID (Re-Identification) module, our model accurately identifies and tracks multiple pedestrian targets in various scenarios. Tested on public datasets, our model demonstrates efficient detection and tracking, particularly in urban settings and crowded environments. However, challenges remain, such as improving resilience in extreme conditions and optimizing real-time processing capabilities. Additionally, our model's tracking performance declines in low-light conditions and highly congested areas. Moving forward, our objective is to address these limitations by integrating advanced image processing technologies and optimizing algorithms to enhance resilience and reduce computational demands. We believe this research contributes to advancing multi-object tracking technology and provides insights for the practical deployment of video surveillance and intelligent systems.

Copyright © 2024 by the Author(s). Published by Institute of Emerging and Computer Engineers. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

Copyright © 2024 by the Author(s). Published by Institute of Emerging and Computer Engineers. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. IECE Transactions on Emerging Topics in Artificial Intelligence

ISSN: 3066-1676 (Online) | ISSN: 3066-1668 (Print)

Email: [email protected]

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/iece/