IECE Transactions on Sensing, Communication, and Control

ISSN: 3065-7431 (Online) | ISSN: 3065-7423 (Print)

Email: [email protected]

The global agriculture industry is undergoing significant transformations driven by the rapid development of the Internet of Things (IoT) and advanced technological integration. IoT has enabled substantial improvements in agricultural efficiency, cost reductions, service accessibility, and operational management, even in remote or resource-limited regions [1]. Particularly in precision agriculture, IoT applications such as real-time monitoring, greenhouse automation, and predictive analytics have gained momentum, allowing for optimized and sustainable cultivation of diverse crops, including vineyards, bananas, olives, and corn [2]. However, despite the evident benefits, several challenges remain, especially related to network infrastructure, data management, and the adoption of open-source IoT technologies in agriculture [3].

The need for automation in agriculture has intensified due to rising global food demand, population growth, and urban migration, which have reduced the available agricultural workforce and land area [4]. This has pushed the agricultural sector towards greater reliance on artificial intelligence (AI) and robotic systems. Recent advances in machine learning (ML), especially deep learning (DL), have substantially improved automation in tasks such as disease detection, weed-crop discrimination, fruit counting, and land cover classification [5]. Convolutional neural networks (CNNs), a class of DL architectures, have consistently achieved higher accuracy than traditional ML methods such as Random Forest (RF) and Support Vector Machine (SVM) across various agricultural applications [6].

Despite these advancements, agricultural practices in developing countries remain heavily dependent on labor-intensive methods requiring continuous manual monitoring, highlighting a critical gap in technological adoption and automation capabilities. To address this gap, recent studies propose IoT-driven agricultural systems that automate critical processes based on environmental conditions, providing real-time feedback directly to farmers through smartphones and cloud platforms [7]. Moreover, the rise of IoT-enabled smart agriculture ecosystems, incorporating technologies like wireless sensor networks, big data analytics, and cloud computing, signifies a broader trend towards sustainable agricultural practices designed to address food security challenges arising from population growth, resource scarcity, and environmental unpredictability [8].

The urgency to mitigate the impacts of agricultural diseases, which substantially threaten global food production, further accentuates the need for technological solutions. Plant viruses alone account for significant economic losses and pose risks to environmental health, food security, and supply chain stability [9]. Consequently, developing autonomous, real-time disease detection systems employing advanced CNN models embedded in IoT-based robotic platforms presents a promising direction. These systems offer enhanced accuracy, operational efficiency, and scalability, making disease management practices more robust and sustainable, particularly for high-value and vulnerable crops like tomatoes [10].

Automated plant disease detection, particularly leaf disease identification, has emerged as an essential research area because of its potential economic impact on agricultural productivity [11]. Techniques that combine multispectral and hyperspectral imaging with ML and DL algorithms, including CNN architectures such as ResNet and VGG, have proven effective in accurately identifying leaf diseases across various plant species. These methods typically outperform traditional ML classifiers such as SVMs and RFs, with substantial gains in accuracy and strong results on metrics such as precision, recall, and F1-score [12].

Early and precise detection of crop diseases using DL methods has shown considerable success in real-time agricultural applications. Notably, advanced CNN models integrated with powerful embedded hardware platforms have achieved high classification accuracy under real-world conditions, supporting their suitability for field deployment [13]. Despite these advances, several open issues persist, including model generalizability across diverse crops, computational efficiency for real-time inference, and the scarcity of comprehensive, publicly available datasets. Addressing these gaps requires datasets that span various crop types, disease classes, and environmental conditions, which would improve the robustness and effectiveness of disease detection models [14].

To meet the need for computational efficiency in edge computing environments, researchers have developed lightweight CNN architectures. For instance, the VGG-ICNN model has demonstrated strong results with a substantially reduced number of parameters, making it well suited to real-time disease detection in resource-constrained agricultural environments [15]. In parallel, advances in autonomous navigation technologies tailored to the complexity and unpredictability of farming environments underline the importance of integrating precise, efficient navigation systems into automated agricultural equipment. Key future research trends identified in this domain include multi-dimensional perception, selective autonomous navigation, multi-agent cooperative systems, and fault diagnosis, all of which are essential to the practicality and reliability of autonomous agricultural vehicles [16].

IoT-based solutions offer promising approaches to these challenges through applications such as smart irrigation, precision farming, crop health monitoring, pest management, agricultural drones, and supply chain management [17]. Nevertheless, widespread adoption of IoT in agriculture requires addressing fundamental issues of connectivity, scalability, data privacy, and cost, as well as raising awareness among stakeholders. Effective collaboration among farmers, technology providers, academia, and policymakers is crucial to unlocking the full potential of IoT-driven agriculture, ultimately contributing to sustainable productivity and resilience amid global challenges such as climate change and resource scarcity [18].

Table 1: Summary of key hardware components and their specifications.

| Component | Specification | Purpose |
|---|---|---|
| Raspberry Pi 4 Model B | ARM Cortex-A72 quad-core 1.5 GHz CPU, 4 GB RAM, Bluetooth 5.0, dual-band Wi-Fi (2.4 GHz/5 GHz) | CNN inference, image capture, and cloud communication |
| ESP32 microcontroller | Dual-core, 240 MHz, integrated Wi-Fi and Bluetooth (BLE) | Motor control, sensor interfacing, obstacle avoidance |
| Web camera | A4Tech 925HD, 1920×1080 pixels, 30 fps | Capturing high-resolution leaf images |
| Ultrasonic sensor | Waterproof, detection range up to 60 cm | Real-time obstacle detection |
| 8-channel relay module | 8 separate relay switches, 5 V DC operating voltage | Control and isolation of DC motors |
| DC motors | 8 motors, 12 V DC operating voltage | Robot mobility and navigation |
| Buck converters | Input 12 V DC, output 5 V DC, max. current 3 A | Voltage regulation and power management |
| DC battery | 12 V, 7 Ah rechargeable | Primary power source for field operations |
| Mechanical frame and tires | Custom-made frame, 8 robotic tires, 2 shock absorbers | Robust navigation through agricultural fields |

This study proposes an autonomous, intelligent system that integrates CNNs with IoT devices, namely a Raspberry Pi 4-powered unmanned ground vehicle (UGV), for real-time tomato disease detection. The CNN model is trained on a publicly available dataset of over 20,000 images covering ten common tomato diseases and healthy plants [19]. The developed system achieves an overall classification accuracy of approximately 83% with near real-time inference (about 0.1 s per image), making it suitable for practical agricultural deployment and addressing the limitations of the traditional, labor-intensive methods noted above.

The contributions of this work include:

- Designing a low-cost unmanned ground vehicle (UGV) specifically for tomato disease detection, making the system accessible and practical for small-scale farmers.
- Developing a computationally efficient and robust convolutional neural network (CNN) model that accurately identifies and classifies major tomato leaf diseases.
- Integrating IoT and edge-computing technologies by deploying the CNN model on a Raspberry Pi 4-based UGV, enabling near real-time disease identification and classification with minimal computational resources.
- Demonstrating the identification and classification of major tomato diseases, thereby showing the system's potential applicability in precision agriculture.

The remainder of this paper is structured as follows: Section 2 presents the methodology and system architecture, Section 3 reports and discusses the experimental results, and Section 4 offers concluding remarks and suggestions for future work.

This section details the approach taken to develop, train, evaluate, and deploy the intelligent automated tomato disease detection system. It covers hardware description and integration, the dataset, the CNN model architecture, model training, performance evaluation metrics, and system implementation.

A low-cost autonomous UGV was designed with a Raspberry Pi 4 as the computational core, an ESP32 microcontroller for motor and sensor control, ultrasonic distance sensors for obstacle avoidance, and a high-resolution web camera (A4Tech 925HD) for image capture. Table 1 summarizes the key hardware components and their specifications.

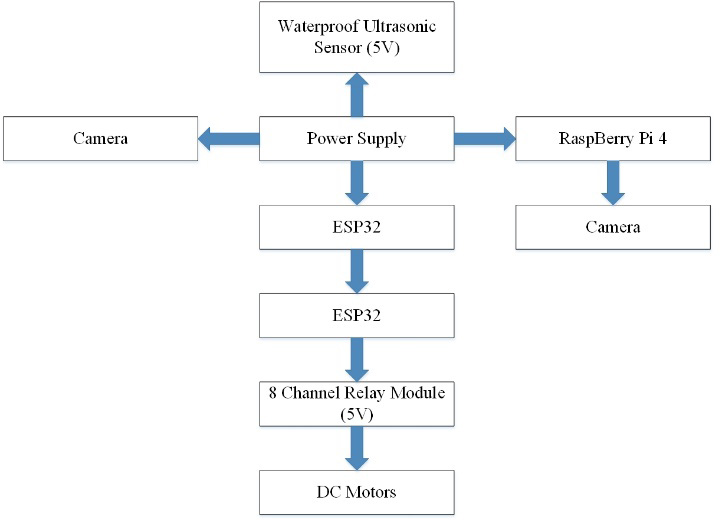

Figure 1 presents a block diagram of the hardware components and their interconnections. At its core, an ESP32 microcontroller drives a relay module that switches the motors. The ESP32 receives input from an ultrasonic sensor for distance measurement and from two cameras for visual feedback. Power is supplied to all subsystems, including the ESP32, the cameras, and the Raspberry Pi 4, which processes additional camera data for image analysis. This configuration allows the robot to navigate, capture real-time data, and interact with its environment through sensor feedback and motor control.
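Ultrasonic distance measurement generally relies on time of flight. The sensor reports the round-trip echo time t, so the one-way distance is d = v·t/2, with v ≈ 343 m/s being the speed of sound in air at about 20 °C. The sketch below uses Python for readability, since the paper does not give the ESP32 firmware. The 60 cm maximum range comes from Table 1. The stop threshold `STOP_DISTANCE_CM` is a hypothetical value chosen only for illustration.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # ~343 m/s in air at about 20 degrees C
MAX_RANGE_CM = 60.0                # rated detection range from Table 1
STOP_DISTANCE_CM = 25.0            # hypothetical safety threshold (not from the paper)


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time in microseconds to one-way distance in cm."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def should_stop(echo_us: float) -> bool:
    """Return True if an obstacle lies within the stop threshold.

    Readings beyond the sensor's rated range are treated as 'no obstacle'.
    """
    distance = echo_to_distance_cm(echo_us)
    if distance > MAX_RANGE_CM:
        return False
    return distance <= STOP_DISTANCE_CM


for echo in (1000.0, 2000.0, 5000.0):
    print(f"echo={echo:.0f} us -> {echo_to_distance_cm(echo):.1f} cm, stop={should_stop(echo)}")
```

On the robot, a stop decision like this would be translated into relay commands for the motors. That mapping is not described in the paper, so it is omitted here.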

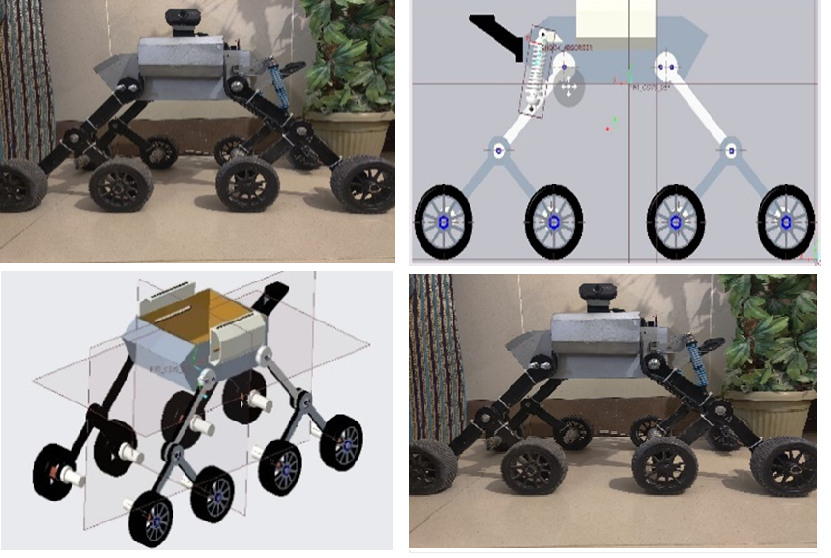

Figure 2 shows the detailed structural design of the robot, which is intended specifically for tomato disease detection in agricultural settings. Emphasizing stability and adaptability, the 8×8 design allows the robot to traverse agricultural fields easily. Shock absorbers and dampers reduce the effect of uneven terrain, enabling smooth motion that protects the delicate onboard equipment needed for disease diagnosis. Small tires make the robot more maneuverable and minimize soil compaction, so it can pass precisely between rows of tomato plants.

The CNN model was trained and evaluated on a publicly available Tomato Leaf Disease Classification dataset comprising over 20,000 labeled images across ten tomato disease classes and one healthy class: Late Blight, Early Blight, Septoria Leaf Spot, Tomato Yellow Leaf Curl Virus, Bacterial Spot, Target Spot, Tomato Mosaic Virus, Leaf Mold, Spider Mites Two-Spotted Spider Mite, Powdery Mildew, and Healthy. The images were captured under diverse conditions, including controlled laboratory settings and natural outdoor environments. All images were resized to a uniform resolution of 128×128 pixels to reduce computational cost and ensure consistent input dimensions for training and inference.
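The eleven class labels listed above can be mapped to integer indices, and each image can be brought to the 128×128 input resolution. The paper does not state which interpolation method was used. The dependency-free sketch below assumes nearest-neighbour sampling on a single-channel image stored as nested lists. A real pipeline would more likely call an image library's resize routine.

```python
# The eleven classes named in the text, mapped to integer label indices.
CLASS_NAMES = [
    "Late Blight", "Early Blight", "Septoria Leaf Spot",
    "Tomato Yellow Leaf Curl Virus", "Bacterial Spot", "Target Spot",
    "Tomato Mosaic Virus", "Leaf Mold",
    "Spider Mites Two-Spotted Spider Mite", "Powdery Mildew", "Healthy",
]
CLASS_TO_INDEX = {name: i for i, name in enumerate(CLASS_NAMES)}
TARGET_SIZE = 128


def resize_nearest(image, out_h=TARGET_SIZE, out_w=TARGET_SIZE):
    """Nearest-neighbour resize of a 2-D image given as a list of rows (assumed method)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(r * in_h) // out_h][(c * in_w) // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


# Toy 256x192 gradient standing in for a captured leaf image.
toy = [[(r + c) % 256 for c in range(192)] for r in range(256)]
small = resize_nearest(toy)
print(len(CLASS_NAMES), len(small), len(small[0]))  # 11 128 128
```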

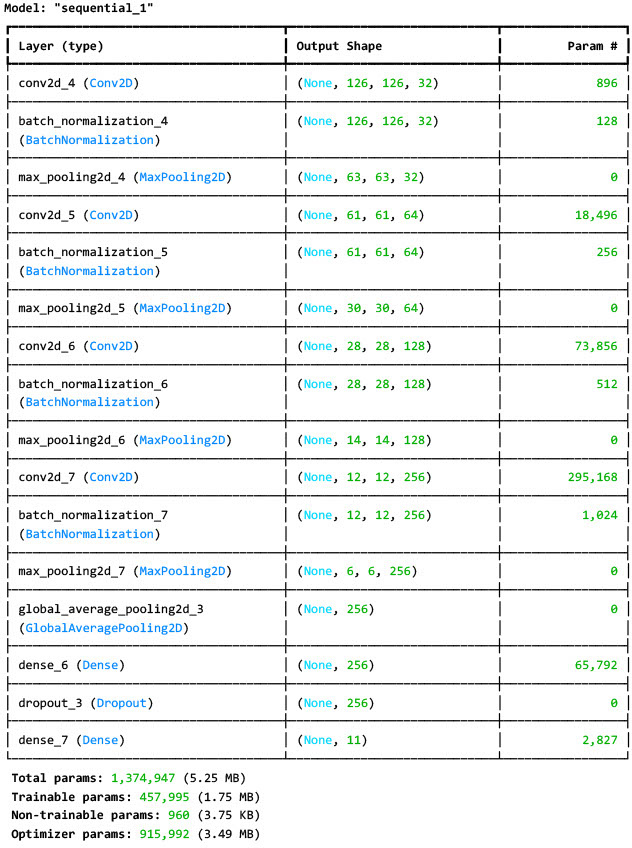

A lightweight CNN architecture was developed for computational efficiency, making it suitable for deployment on resource-constrained edge devices. The model consists of four convolutional layers with increasing filter depths (32, 64, 128, and 256 filters, respectively), each followed by batch normalization and a ReLU activation. Max pooling layers follow the convolutional blocks to reduce spatial dimensionality. A global average pooling layer then condenses the feature maps, limiting overfitting and model complexity. Fully connected dense layers with dropout regularization follow, and a final dense layer with softmax activation performs multi-class classification into 11 categories. Figure 3 summarizes the CNN architecture, listing layer types, output dimensions, and parameter counts.
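To make the layer description concrete, the sketch below tallies output shapes and parameter counts for one plausible instantiation of the architecture. Several details are assumptions that the text does not state:
- 3×3 kernels with 'same' padding.
- A 2×2 max-pooling layer after every convolutional block.
- RGB input.
- A single hidden dense layer of 128 units (hypothetical).

The figures therefore show how the count is obtained. They will not necessarily match the parameter count reported in Figure 3.

```python
def conv_params(kernel, c_in, c_out):
    # kernel x kernel x c_in weights per filter, plus one bias per filter
    return kernel * kernel * c_in * c_out + c_out


def batchnorm_params(channels):
    # gamma and beta (trainable) plus moving mean and variance (non-trainable)
    return 4 * channels


def dense_params(n_in, n_out):
    # weight matrix plus bias vector; dropout adds no parameters
    return n_in * n_out + n_out


def summarize(input_hw=128, in_channels=3, filters=(32, 64, 128, 256),
              kernel=3, hidden_units=128, num_classes=11):
    """Return the final spatial size and total parameter count (assumed layout)."""
    hw, c_in, total = input_hw, in_channels, 0
    for f in filters:
        total += conv_params(kernel, c_in, f) + batchnorm_params(f)
        hw //= 2  # 'same' padding keeps H x W; 2x2 max pooling then halves it
        print(f"block with {f} filters -> {hw}x{hw}x{f} after pooling")
        c_in = f
    # Global average pooling has no parameters and yields a c_in-length vector.
    total += dense_params(c_in, hidden_units)
    total += dense_params(hidden_units, num_classes)  # softmax output layer
    return hw, total


final_hw, total_params = summarize()
print(f"final feature map {final_hw}x{final_hw}, {total_params:,} parameters")
```

Under these assumptions, the last block outputs an 8×8×256 feature map, and the model has 424,651 parameters in total, including batch-normalization moving statistics. Almost all of them sit in the convolutional layers. This illustrates why global average pooling keeps the dense head small.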

The dataset was split into 80% training and 20% validation subsets. The CNN was trained for 15 epochs using categorical cross-entropy loss and the Adam optimizer with a learning rate of 0.001. Model performance was evaluated using accuracy, precision, recall, F1-score, and a confusion matrix for class-wise analysis. Inference speed was benchmarked against MobileNetV2 to assess real-time performance.
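The training setup above can be illustrated with two small building blocks: an 80/20 random split and the categorical cross-entropy loss for a single sample. The paper does not say whether the split was shuffled or stratified. This dependency-free sketch assumes a seeded random shuffle, and the function names are illustrative.

```python
import math
import random


def train_val_split(items, val_fraction=0.2, seed=42):
    """Shuffle a copy of `items` and split it into training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Loss = -sum_k y_k * log(p_k); with a one-hot y this is -log(p_true)."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(one_hot, probs))


train, val = train_val_split(range(20000))
print(len(train), len(val))  # 16000 4000

probs = softmax([2.0, 1.0, 0.1])
print(round(categorical_cross_entropy([1, 0, 0], probs), 4))
```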

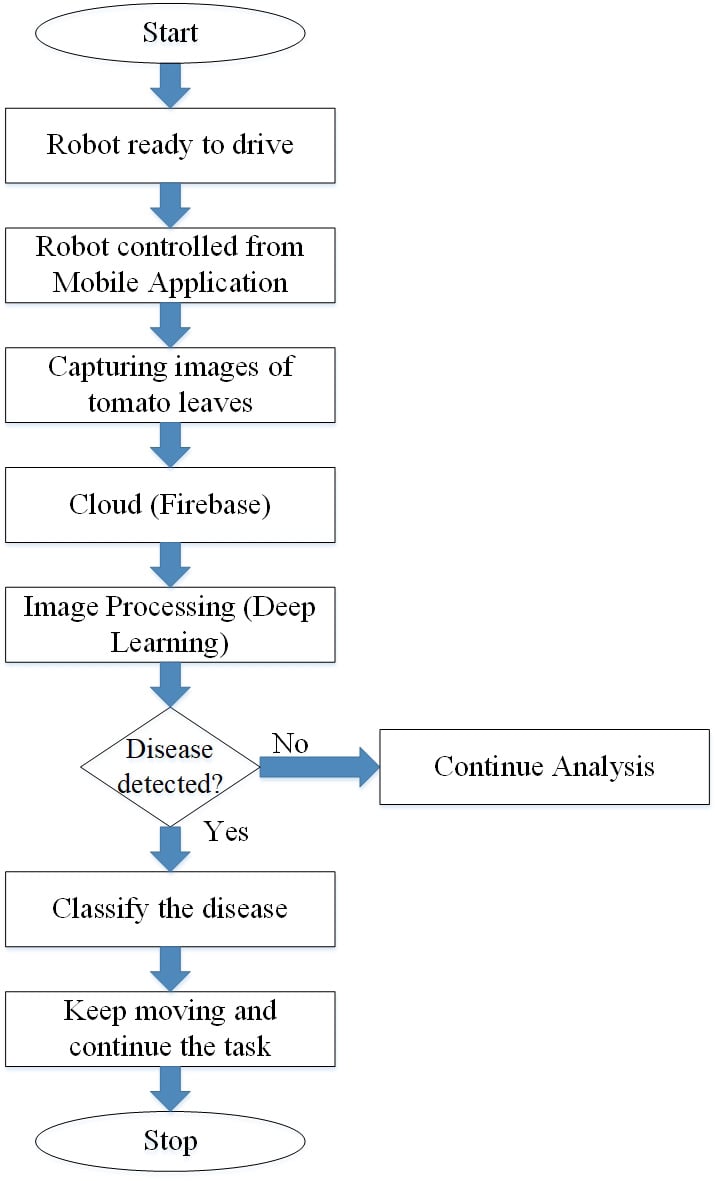

Figure 4 presents the operational workflow of the system. The robot navigates semi-autonomously through agricultural fields and captures high-resolution tomato leaf images, which are resized onboard to 128×128 pixels. The onboard CNN model then classifies each image in real time. The results are uploaded to Firebase cloud storage and displayed to end users through user-friendly mobile or web interfaces.
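The capture, classify, and upload loop described above can be outlined as follows. This is a hypothetical sketch that uses only the Python standard library, so no real Firebase API is assumed:
- `capture_image` and `classify` are placeholder stubs for the camera driver and the onboard CNN.
- `upload` stands in for the Firebase client.
- The record fields are illustrative rather than the authors' schema.

```python
import json
from datetime import datetime, timezone


def capture_image():
    # Placeholder for a camera frame already resized to 128x128 (hypothetical stub).
    return [[0] * 128 for _ in range(128)]


def classify(image):
    # Placeholder for onboard CNN inference; returns (label, confidence).
    return "Healthy", 0.97


def build_record(label, confidence, plant_id):
    """Assemble a JSON-serializable result record (hypothetical schema)."""
    return {
        "plant_id": plant_id,
        "label": label,
        "confidence": round(confidence, 3),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def run_survey(n_plants, upload):
    """Capture, classify, and pass each serialized record to `upload`."""
    for plant_id in range(n_plants):
        image = capture_image()
        label, confidence = classify(image)
        upload(json.dumps(build_record(label, confidence, plant_id)))


uploaded = []
run_survey(3, uploaded.append)  # a list stands in for cloud storage here
print(uploaded[0])
```

Passing `upload` in as a function keeps the loop independent of any particular cloud client, so it can be tested offline as shown.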

This section presents a detailed evaluation of the proposed intelligent automated tomato disease detection system, focusing on the developed CNN model's performance metrics and the robot's operational efficacy.

The proposed lightweight CNN model achieved an overall classification accuracy of approximately 83%. Performance was further assessed using per-class precision, recall, and F1-score, which provide detailed insight into the model's effectiveness for each class. Figure 5 shows these metrics for each class.
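For reference, the per-class metrics follow directly from the confusion matrix C, where C[i][j] counts samples of true class i predicted as class j:
- Precision for class k is C[k][k] divided by the sum of column k.
- Recall for class k is C[k][k] divided by the sum of row k.
- F1 is the harmonic mean of precision and recall.
- Overall accuracy is the trace divided by the total count.

The sketch below applies these definitions to a small, made-up three-class matrix purely for illustration. It does not reproduce the paper's results.

```python
def per_class_metrics(cm):
    """Return per-class precision, recall, F1 lists and overall accuracy.

    cm[i][j] = number of samples with true class i predicted as class j.
    """
    n = len(cm)
    precision, recall, f1 = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return precision, recall, f1, accuracy


# Illustrative toy matrix (invented counts, not the paper's data).
toy_cm = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
p, r, f, acc = per_class_metrics(toy_cm)
print([round(x, 3) for x in p], [round(x, 3) for x in r], round(acc, 3))
```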

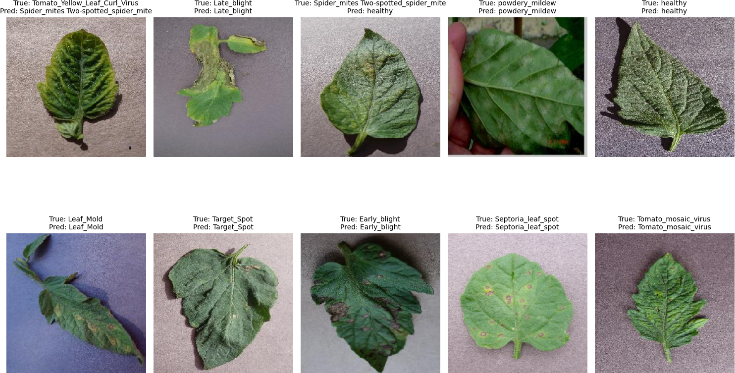

Specifically, the CNN model achieved precision above 80% for diseases such as Bacterial Spot, Late Blight, and Tomato Yellow Leaf Curl Virus, demonstrating robust performance on diseases with clearly visible symptoms. Performance was lower for Spider Mites Two-Spotted Spider Mite and Target Spot, reflecting the difficulty of recognizing subtle visual symptoms. These results underscore the need for further dataset diversification and targeted model improvements.

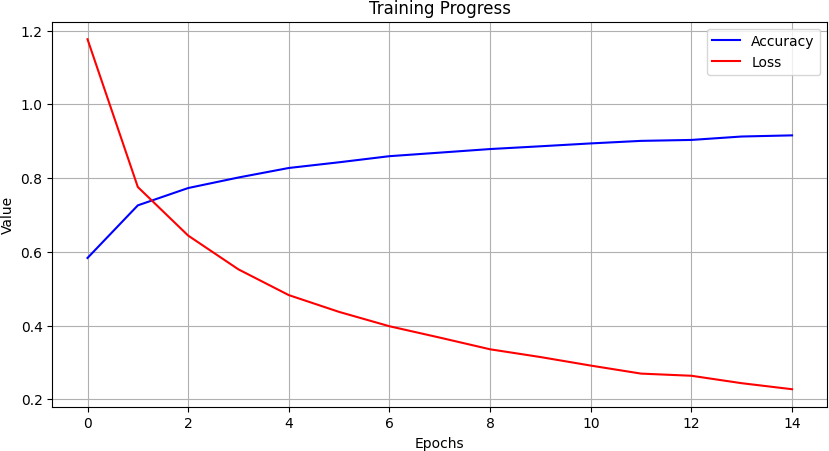

Figure 6 illustrates the training accuracy and loss curves over 15 epochs, showing stable convergence behavior. The model accuracy steadily increased, whereas the loss consistently decreased, indicating successful learning and minimal overfitting.

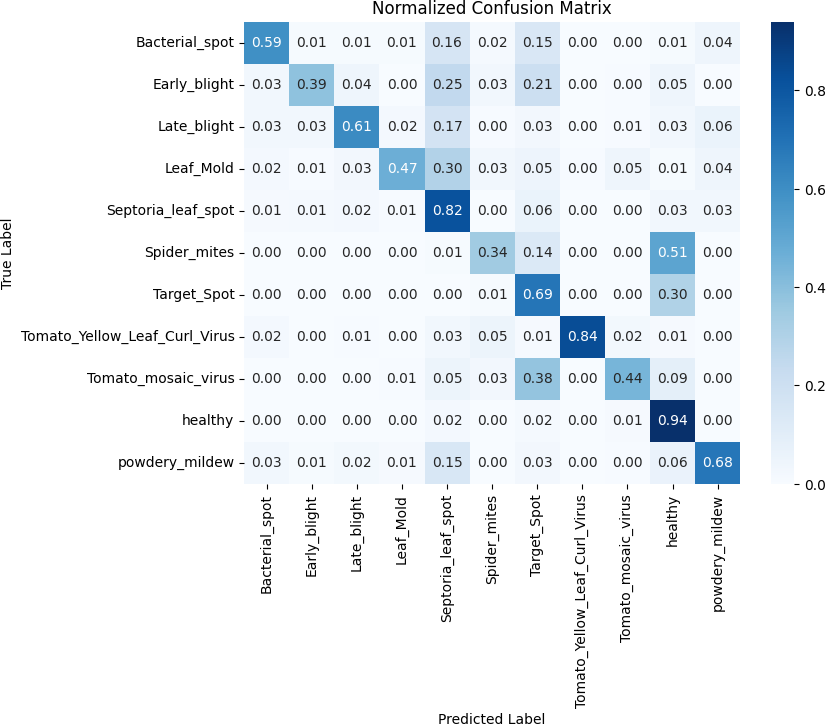

Figure 7 presents the normalized confusion matrix, which gives deeper insight into class-wise performance. Predictions for healthy leaves were robust, with minimal misclassification. However, substantial confusion was observed between visually similar disease classes (e.g., Leaf Mold and Target Spot), indicating areas for future improvement.
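The text does not state which normalization was applied. The sketch below assumes row normalization, the most common convention, where each row of the confusion matrix is divided by the number of true samples in that class. This turns counts into per-class rates: the diagonal then holds each class's recall, and the off-diagonal entries show where errors go. The matrix uses invented counts and generic labels, and the helper that reports the most-confused pair is illustrative only.

```python
def normalize_rows(cm):
    """Divide each row by its sum so entries become per-true-class rates."""
    out = []
    for row in cm:
        s = sum(row)
        out.append([v / s if s else 0.0 for v in row])
    return out


def most_confused_pair(norm_cm, labels):
    """Return (true_label, predicted_label, rate) for the largest off-diagonal rate."""
    best = (None, None, -1.0)
    for i, row in enumerate(norm_cm):
        for j, rate in enumerate(row):
            if i != j and rate > best[2]:
                best = (labels[i], labels[j], rate)
    return best


labels = ["healthy", "disease_a", "disease_b"]
toy_cm = [            # invented counts for illustration only
    [48, 1, 1],
    [0, 35, 15],
    [1, 9, 40],
]
norm = normalize_rows(toy_cm)
print(most_confused_pair(norm, labels))  # ('disease_a', 'disease_b', 0.3)
```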

Real-time applicability was evaluated by measuring the inference time per image. The proposed lightweight CNN model achieved an average inference time of 0.101 seconds per image, compared with 0.111 seconds per image for the MobileNetV2 benchmark. The designed model is therefore approximately 9% faster ((0.111 − 0.101)/0.111 ≈ 0.09), supporting its suitability for near real-time field deployment.
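The relative speed-up quoted above is computed against the slower baseline: (0.111 − 0.101)/0.111 ≈ 9%, or roughly 9.9 versus 9.0 images per second. The sketch below shows one way to measure mean per-image latency with `time.perf_counter`, excluding a few warm-up calls, and then compute the speed-up. Here `predict` is a hypothetical placeholder for either model's inference call. The paper's exact benchmarking protocol is not specified.

```python
import time


def mean_latency_s(predict, inputs, warmup=3):
    """Average wall-clock seconds per call, excluding the first `warmup` calls."""
    for x in inputs[:warmup]:
        predict(x)
    timed = inputs[warmup:]
    if not timed:
        raise ValueError("need more inputs than warm-up calls")
    start = time.perf_counter()
    for x in timed:
        predict(x)
    return (time.perf_counter() - start) / len(timed)


def relative_speedup(t_model, t_baseline):
    """Fractional reduction in latency relative to the baseline."""
    return (t_baseline - t_model) / t_baseline


print(f"{relative_speedup(0.101, 0.111):.1%}")            # 9.0%
print(f"{1 / 0.101:.1f} vs {1 / 0.111:.1f} images/s")     # 9.9 vs 9.0 images/s

# Usage with a dummy workload standing in for CNN inference:
latency = mean_latency_s(lambda n: sum(i * i for i in range(n)), [10_000] * 20)
print(f"dummy latency: {latency * 1000:.3f} ms")
```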

Figure 8 presents sample classification outputs from the CNN model. The model classified visually distinct diseases accurately, whereas subtle cases posed challenges, consistent with the confusion matrix results.

The low-cost UGV platform demonstrated effective operational capabilities. Equipped with an 8-channel relay module for motor control and a waterproof ultrasonic sensor for obstacle detection, the robot navigated the test environments smoothly and maintained stable performance in controlled field trials.

The use of energy-efficient hardware components, such as the Raspberry Pi 4 and buck converters, enhanced the energy efficiency of robotic operation [20]. This design lowers energy consumption, improving the system's sustainability and economic viability for small-scale farmers.
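As a rough sizing aid, the battery listed in Table 1 stores about E = 12 V × 7 Ah = 84 Wh, and each buck converter can deliver at most 5 V × 3 A = 15 W. The ideal runtime is t = E / P for an average load P drawn from the battery. The sketch below adds a usable-capacity fraction and a converter efficiency for loads on the 5 V rail. These two factors and the example loads are hypothetical values chosen for illustration, since the paper does not report measured power consumption.

```python
def battery_energy_wh(voltage_v=12.0, capacity_ah=7.0):
    """Nominal stored energy E = V x Ah, in watt-hours."""
    return voltage_v * capacity_ah


def runtime_hours(load_12v_w, load_5v_w, converter_efficiency=0.9,
                  usable_fraction=0.8, voltage_v=12.0, capacity_ah=7.0):
    """Rough runtime estimate t = usable energy / battery-side power.

    Loads on the 5 V rail pass through buck converters, so the battery must
    supply load_5v_w / converter_efficiency for them. The efficiency and
    usable fraction defaults are hypothetical, not values from the paper.
    """
    battery_side_w = load_12v_w + load_5v_w / converter_efficiency
    if battery_side_w <= 0:
        raise ValueError("total load must be positive")
    usable_wh = battery_energy_wh(voltage_v, capacity_ah) * usable_fraction
    return usable_wh / battery_side_w


print(battery_energy_wh())  # 84.0
# Hypothetical example: 24 W average motor draw, 7.5 W on the 5 V rail.
print(f"{runtime_hours(24.0, 7.5):.2f} h")
```

Because the motors draw power directly from the 12 V battery, they usually dominate this budget. Any converter losses only affect the smaller 5 V share.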

Despite these promising results, limitations remain, including occasional misclassification between visually similar disease classes. Future work will focus on increasing dataset diversity, further optimizing the CNN architecture, and conducting more extensive field trials to improve reliability and generalizability.

This study presented an intelligent, automated tomato disease detection system combining a lightweight convolutional neural network (CNN) with a low-cost IoT-based unmanned ground vehicle (UGV). The CNN model was trained on a publicly available dataset of over 20,000 tomato leaf images and classified ten common tomato diseases and healthy leaves with an overall accuracy of approximately 83%. Quantitative evaluation using precision, recall, F1-score, and confusion matrix analysis showed strong performance for diseases with distinct visual features (precision above 80%), while revealing classification challenges among diseases with subtle symptom variations. Real-time applicability was demonstrated by an average inference time of 0.101 seconds per image, approximately 9% faster than the MobileNetV2 benchmark. The low-cost UGV design, which integrates an 8-channel relay module and waterproof ultrasonic sensors for reliable obstacle avoidance and autonomous navigation, further supports practical deployment. Limitations, such as occasional misclassification of visually similar diseases, were also identified. Future work will include increasing dataset diversity, refining the CNN architecture, and conducting more extensive field evaluations to improve reliability and generalizability. Overall, the integration of deep learning and IoT technologies demonstrated here contributes toward practical precision agriculture solutions, promoting sustainable disease management practices and improved economic outcomes in agriculture.

