Abstract

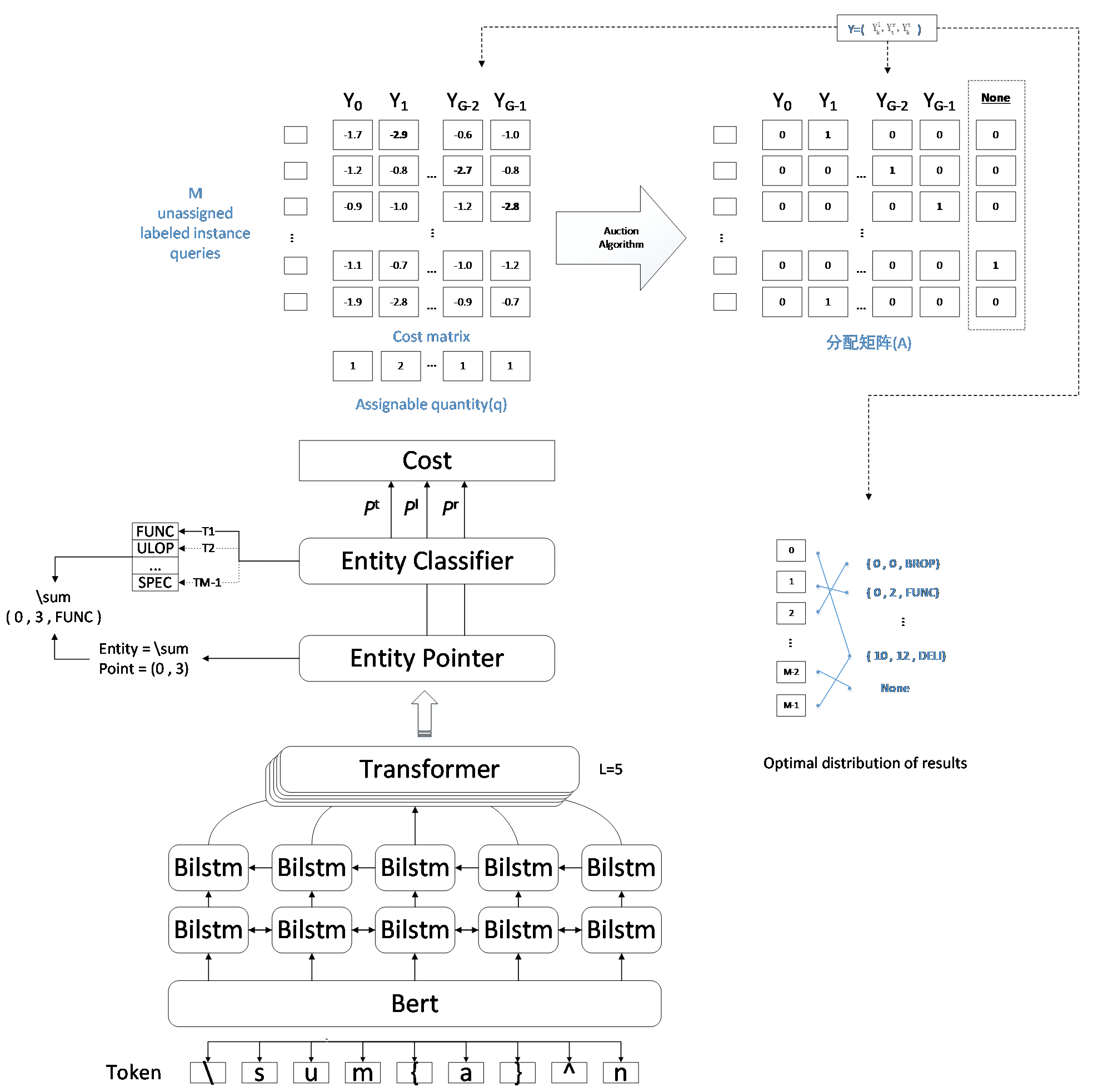

In natural language processing, named entity recognition (NER) is an essential task. Mathematical formulas usually contain many technical terms, units of measure, and other domain-specific knowledge, and integrating this information into a knowledge graph can significantly enhance the graph's semantic expressiveness. Identifying the named entities in mathematical formulas makes it possible to extract the key concepts, entities, and relationships for the knowledge graph, laying a foundation for its construction and making it easier to interpret and analyse in practical applications. Furthermore, the structured knowledge derived from this process can support personalized learning path recommendations by mapping the identified entities to educational resources and prerequisite relationships. To address the limited ability of existing models to recognize mathematical formula entities, this paper proposes a mathematical formula NER method based on enhanced dynamic label allocation. A mathematical formula entity recognition model, named BERT-formula, is constructed from BERT (Bidirectional Encoder Representations from Transformers), BiLSTM (Bidirectional Long Short-Term Memory), and a Transformer. At the model input, the feature representation of deep semantic information is enhanced by concatenating extra sequences with the original vector representation; entity label prediction is then treated as a one-to-many linear assignment problem, and an auction algorithm is introduced to obtain the optimal, minimum-cost allocation. Experiments show that the model achieves an accuracy of 98.8% and an F1 score of 98.8% on the mathematical formula dataset, improvements of 1.51 and 1.05 percentage points, respectively, over BERT-BiLSTM-CRF. These results indicate that the approach performs well at identifying mathematical formula entities.
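The abstract does not spell out how the auction-based label allocation works. As a rough illustration only, the sketch below implements a generic forward auction algorithm (in the style of Bertsekas) for a minimum-cost assignment in which each label may receive several tokens. The integer cost matrix, the per-label `capacity` list, the slot-replication trick used to turn one-to-many into one-to-one, the `eps` choice, and the function name `auction_assign` are all assumptions made for this example; they are not the authors' implementation, which is in the linked repository.

```python
from typing import List, Sequence


def auction_assign(cost: Sequence[Sequence[int]], capacity: Sequence[int]) -> List[int]:
    """Hypothetical helper: assign each row (e.g. a token) to one column (e.g. a label)
    at minimum total cost, where column j may receive at most capacity[j] rows.

    Assumption: the one-to-many case is reduced to one-to-one by replicating
    column j into capacity[j] identical "slots". Costs are assumed to be integers,
    so eps < 1 / n_rows guarantees the result is optimal, not just near-optimal.
    """
    n_rows = len(cost)
    # Expand each label into capacity[j] identical slots.
    slot_label = [j for j, c in enumerate(capacity) for _ in range(c)]
    n_slots = len(slot_label)
    if n_rows > n_slots:
        raise ValueError("total capacity must be at least the number of rows")

    eps = 1.0 / (n_rows + 1)
    prices = [0.0] * n_slots
    owner = [-1] * n_slots        # row currently holding each slot
    assigned = [-1] * n_rows      # slot currently held by each row
    unassigned = list(range(n_rows))

    while unassigned:
        i = unassigned.pop()
        # Benefit is negative cost; the value of a slot is benefit minus price.
        best_s, best_v, second_v = -1, float("-inf"), float("-inf")
        for s in range(n_slots):
            v = -cost[i][slot_label[s]] - prices[s]
            if v > best_v:
                best_s, best_v, second_v = s, v, best_v
            elif v > second_v:
                second_v = v
        if second_v == float("-inf"):  # only one slot exists
            second_v = best_v
        # Bid: raise the slot's price by the margin over the second-best slot plus eps.
        prices[best_s] += best_v - second_v + eps
        previous = owner[best_s]
        if previous != -1:            # evict the previous holder, who must bid again
            assigned[previous] = -1
            unassigned.append(previous)
        owner[best_s] = i
        assigned[i] = best_s

    # Map each row's slot back to its label index.
    return [slot_label[s] for s in assigned]


if __name__ == "__main__":
    # Three tokens, three labels; label 1 may be used twice.
    cost = [[4, 1, 3], [2, 0, 5], [3, 2, 2]]
    print(auction_assign(cost, capacity=[1, 2, 1]))  # [1, 1, 2]
```

Raising a price by the bid margin plus `eps` guarantees that every bid makes progress, so the loop terminates; with integer costs the final total cost is within `n_rows * eps < 1` of the optimum and is therefore optimal. In a tagging model the costs would presumably come from negated label scores, but how BERT-formula derives them is not stated here.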

Keywords

named entity recognition (NER)

mathematics

bidirectional encoder representations from transformers (BERT)

deep learning

auction algorithm

Data Availability Statement

The source code is available at https://github.com/Ctrius/formula.

Funding

This work was supported in part by the Project of Construction and Support for high-level Innovative Teams of Beijing Municipal Institutions under Grant BPHR20220104; in part by the Beijing Scholars Program under Grant 099; and in part by the IFLYTEK University Intelligent Teaching Innovation Research Special Project under Grant 2022XF055.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Liu, H., & Zhang, Q. (2025). Enhanced Dynamic Label Allocation for Mathematical Formula Named Entity Recognition in Learning Path Recommendations. Frontiers in Educational Innovation and Research, 1(1), 10–21. https://doi.org/10.62762/FEIR.2024.416675

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Copyright © 2025 by the Author(s). Published by Institute of Emerging and Computer Engineers. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
