IECE Transactions on Internet of Things

ISSN: 2996-9298 (Online)

Email: cjc@nwpu.edu.cn

[1] Musacchi, S., & Serra, S. (2018). Apple fruit quality: Overview on pre-harvest factors. Scientia Horticulturae, 234, 409–430.

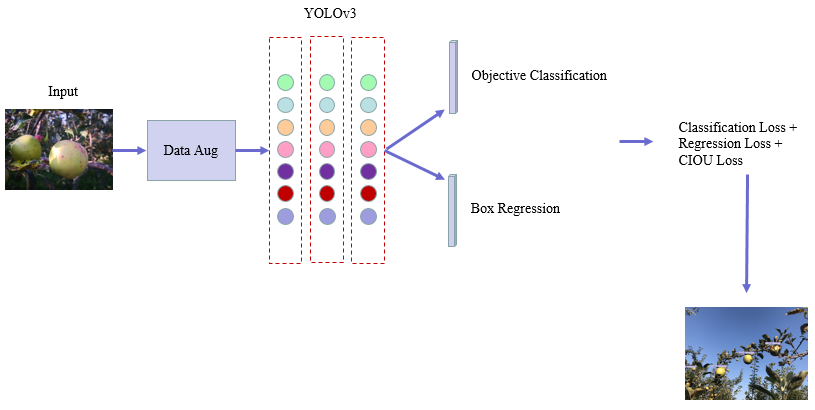

[2] Tian, Y., Yang, G., Wang, Z., Wang, H., Li, E., & Liang, Z. (2019). Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Computers and Electronics in Agriculture, 157, 417–426.

[3] Liu, Q., Cheng, L., Jia, A. L., & Liu, C. (2021). Deep reinforcement learning for communication flow control in wireless mesh networks. IEEE Network, 35(2), 112–119.

[4] Huang, Y., Cheng, L., Xue, L., Liu, C., Li, Y., Li, J., & Ward, T. (2021). Deep adversarial imitation reinforcement learning for QoS-aware cloud job scheduling. IEEE Systems Journal, 16(3), 4232–4242.

[5] Cheng, L., Wang, Y., Liu, Q., Epema, D. H., Liu, C., Mao, Y., & Murphy, J. (2021). Network-aware locality scheduling for distributed data operators in data centers. IEEE Transactions on Parallel and Distributed Systems, 32(6), 1494–1510.

[6] Cheng, F., Huang, Y., Tanpure, B., Sawalani, P., Cheng, L., & Liu, C. (2022). Cost-aware job scheduling for cloud instances using deep reinforcement learning. Cluster Computing, 1–13.

[7] Li, J., Tong, X., Liu, J., & Cheng, L. (2023). An Efficient Federated Learning System for Network Intrusion Detection. IEEE Systems Journal.

[8] Huang, H., Xue, X., Liu, C., Wang, Y., Luo, T., Cheng, L., ... & Li, X. (2023). Statistical Modeling of Soft Error Influence on Neural Networks. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems.

[9] Girshick, R., Donahue, J., Darrell, T., & Malik, J. (2014). Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 580–587). Columbus, OH, USA.

[10] Girshick, R. B. (2015). Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (pp. 1440–1448). Santiago, Chile: IEEE Computer Society.

[11] Ren, S., He, K., Girshick, R. B., & Sun, J. (2015). Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv.

[12] Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.-Y., & Berg, A. C. (2016). SSD: Single shot multibox detector. In Proceedings of the 14th European Conference on Computer Vision (Vol. 9905, pp. 21–37). Amsterdam, The Netherlands.

[13] Redmon, J., Divvala, S. K., Girshick, R. B., & Farhadi, A. (2016). You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 779–788). Las Vegas, NV, USA: IEEE Computer Society.

[14] Redmon, J., & Farhadi, A. (2018). YOLOv3: An incremental improvement. arXiv.

[15] Torrey, L., & Shavlik, J. (2010). Transfer learning. In Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques (pp. 242–264). IGI Global.

[16] Zheng, Z., Wang, P., Liu, W., Li, J., Ye, R., & Ren, D. (2020). Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the 34th AAAI Conference on Artificial Intelligence (pp. 12993–13000). New York, NY, USA: AAAI Press.

[17] Chen, J., Li, T., Zhang, Y., You, T., Lu, Y., Tiwari, P., & Kumar, N. (2023). Global-and-Local Attention-Based Reinforcement Learning for Cooperative Behaviour Control of Multiple UAVs. IEEE Transactions on Vehicular Technology.

Portico

All published articles are preserved here permanently:

https://www.portico.org/publishers/iece/