IECE Transactions on Machine Intelligence

ISSN: request pending (Online) | ISSN: request pending (Print)

Email: [email protected]

The proliferation of Internet of Things (IoT) devices has transformed domains including smart homes, healthcare, and industrial automation. However, this widespread adoption has introduced significant cybersecurity risks, as botnets increasingly exploit vulnerable IoT devices for malicious purposes such as Distributed Denial of Service (DDoS) attacks and reconnaissance [1, 2, 3]. Traditional centralized machine-learning approaches to IoT anomaly detection require aggregating sensitive device data on a central server, which raises substantial privacy concerns and increases the risk of data breaches [4]. Furthermore, centralized training is computationally expensive and often impractical for resource-constrained IoT devices, which limits scalability [5].

To address these challenges, Federated Learning (FL) has emerged as a promising solution that enables decentralized model training while retaining raw data on local devices [6, 7, 8, 9]. FL enhances privacy preservation by ensuring that only model updates, rather than raw data, are shared with a central aggregator. Among the various FL techniques, Federated Averaging (FedAvg) has gained prominence because of its efficiency in distributed optimization and its reduced communication overhead [10].

However, applying FL to IoT botnet detection presents several challenges, including heterogeneous non-IID data distributions, resource constraints, and communication overhead. Addressing these limitations requires optimized FL strategies that improve both model convergence and computational efficiency. Although FedAvg serves as a baseline, alternative approaches such as FedProx and FedNova have been introduced to handle non-IID data more effectively [33, 34]. A comparative discussion of these approaches is necessary to assess their suitability for IoT botnet detection.

To evaluate the performance of FedAvg for IoT botnet detection, we used the N-BaIoT dataset [11], a real-world benchmark containing benign and malicious network traffic from multiple IoT devices infected with the Mirai and Gafgyt botnets [12]. This dataset provides diverse attack scenarios, making it well suited for assessing the robustness of FL-based anomaly detection. The dataset is cited to support reproducibility.

This study makes the following key contributions.

A privacy-preserving FL framework using FedAvg for IoT botnet detection is proposed, ensuring that sensitive device data remains localized.

The impact of IID vs. Non-IID data distributions on model accuracy, convergence speed, and computational efficiency is analyzed.

An 80% reduction in communication overhead is demonstrated while maintaining detection accuracy comparable to that of centralized approaches.

Empirical evidence shows that FedAvg achieves scalability up to 100 devices, maintaining 97.5% detection accuracy in IID and 95.2% in Non-IID settings.

The remainder of this paper is structured as follows. Section 2 reviews related work on IoT botnet detection and Federated Learning. Section 3 describes the proposed methodology, including data pre-processing, model architecture, and FL implementation. Section 4 presents experimental results and performance analysis. Section 5 discusses key findings, limitations, and future research directions. Finally, Section 6 concludes this paper.

Federated Learning (FL) has gained significant attention as an alternative to traditional machine learning, particularly in sensitive domains, such as healthcare [13], finance [14], and IoT security [15]. This section reviews prior research in the areas of FL for IoT security, FL for anomaly detection, and botnet detection using FL and other machine learning techniques, highlighting the key limitations of existing studies.

IoT networks present unique security challenges because of their distributed nature, heterogeneous devices, and constrained computational resources [1, 2]. Traditional centralized machine-learning solutions for securing IoT networks require raw data aggregation, which increases the risk of privacy breaches and limits scalability [4, 15, 16]. FL has emerged as an effective approach for mitigating these risks by enabling decentralized model training while keeping data localized on edge devices [6, 7].

Konečný et al. [5] examined the advantages of FL in securing IoT ecosystems, highlighting its potential to preserve privacy while reducing communication overhead. Yang et al. [17] conducted an extensive survey on FL applications, emphasizing their role in securing IoT networks without exposing raw data. Additionally, techniques such as adaptive aggregation [18], differential privacy [19], and secure multiparty computation [20] have been integrated into FL frameworks to enhance security.

However, most existing studies have focused on general anomaly detection rather than evaluating FL specifically for botnet threats. For example, Li et al. [21] and Xu et al. [22] demonstrated the effectiveness of FL in anomaly detection but did not assess its applicability to botnet detection. This study fills this gap by evaluating FL specifically for botnet detection using real-world botnet traffic data.

Anomaly detection is essential for IoT security because it enables the identification of malicious activities such as botnet attacks, unauthorized access, and data exfiltration [23, 24]. Several deep-learning-based anomaly detection frameworks have been proposed, but they often rely on centralized data collection, which is impractical in privacy-sensitive environments [25, 4].

Xu et al. [22] applied FL to medical anomaly detection and demonstrated its ability to train robust models while preserving data privacy. Similarly, Mothukuri et al. [26] explored FL-based anomaly detection for edge devices, illustrating its potential to reduce computational load while maintaining accuracy. Despite these advances, FL for IoT botnet detection remains underexplored. Studies such as [26] evaluated FL for general security threats, but did not specifically consider the Mirai and Gafgyt botnets, which are among the most prevalent IoT botnet families. This study addresses this gap by evaluating botnet detection using the N-BaIoT dataset while also analyzing the performance under IID and Non-IID data distributions.

The detection of botnet attacks in IoT networks has been studied extensively, with existing solutions leveraging traditional machine learning, deep learning, and statistical methods [27, 28]. Conventional approaches rely on network traffic analysis using centralized models, which require significant computational resources and expose sensitive data [15, 24].

Meidan et al. [11] introduced the N-BaIoT dataset, demonstrating the feasibility of deep learning-based botnet detection. However, their study relied on a centralized training approach, which limits its real-world applicability. More recently, Popoola [29] proposed an FL-based botnet detection framework, showing that FL models can achieve accuracy levels comparable to those of centralized models while preserving privacy. Similarly, Xiong et al. [30] studied FL for botnet detection under non-IID conditions, highlighting the performance gap compared to IID scenarios.

Traditional signature-based and anomaly-based detection methods [31, 32] require frequent updates and retraining, which makes FL a promising alternative. By continuously learning from distributed attack patterns without centralized data collection [6, 29], FL improves adaptability to evolving botnet threats.

Despite the progress in FL-based botnet detection, several challenges remain.

Most FL-based IoT security studies focus on generic anomaly detection rather than botnet-specific threats. Although studies such as [21, 22] addressed anomaly detection, they did not assess the effectiveness of FL against real-world botnet datasets, such as N-BaIoT [11].

Few studies investigate Non-IID data challenges in IoT botnet detection, even though such data significantly impacts FL model performance. IoT devices generate highly heterogeneous data, which leads to local model biases that hinder global model aggregation [30].

Communication efficiency and resource constraints remain bottlenecks in deploying FL at scale. While works such as [5, 18] proposed optimizations, further improvements are needed for real-world IoT networks.

This study directly addresses these gaps by:

Evaluating FedAvg for botnet detection using the N-BaIoT dataset, which includes real-world botnet traffic from infected IoT devices.

Investigating FL performance under IID and Non-IID data distributions, explicitly analyzing its impact on model accuracy and convergence.

Proposing optimizations for improving communication efficiency, specifically employing gradient sparsification and quantization techniques to reduce bandwidth consumption.

This section presents an approach to IoT botnet detection using Federated Learning (FL) with Federated Averaging (FedAvg). The dataset, pre-processing pipeline, model architecture, FL implementation, and experimental setup are outlined.

The N-BaIoT dataset [11] is a benchmark dataset containing network traffic from IoT devices infected by the Mirai and Gafgyt botnets. The dataset includes the following attack categories.

DDoS Attacks: UDP floods, TCP SYN floods, and ACK floods.

Reconnaissance Attacks: Network scanning and port scanning.

The dataset originally contained multiple network flow features. The 115 most relevant features were selected based on the following criteria:

Domain Expertise: Features commonly used in anomaly-based intrusion detection.

Information Gain Ranking: Top-ranked features based on entropy reduction.

Correlation Analysis: Redundant or highly correlated features were removed.
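The information gain criterion above can be illustrated with a short, standard-library sketch. It is not the study's feature-selection code: the function names, the assumption that each feature has already been discretized into bins, and the four-sample toy data are all hypothetical and chosen only to show how entropy reduction ranks a feature.

```python
import math
from collections import Counter
from typing import Hashable, List, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Shannon entropy (in bits) of a label sequence."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(feature_bins: Sequence[Hashable],
                     labels: Sequence[Hashable]) -> float:
    """Entropy reduction obtained by splitting the labels on a binned feature."""
    total = len(labels)
    groups: dict = {}
    for bin_value, label in zip(feature_bins, labels):
        groups.setdefault(bin_value, []).append(label)
    # Weighted entropy of the labels inside each bin.
    conditional = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


# Hypothetical toy data: 0 = benign, 1 = botnet.
labels: List[int] = [0, 0, 1, 1]
informative = ["low", "low", "high", "high"]    # separates the classes
uninformative = ["low", "high", "low", "high"]  # carries no class signal

gain_informative = information_gain(informative, labels)
gain_uninformative = information_gain(uninformative, labels)
```

Features would then be ranked by their gain, with the highest-scoring ones retained. Continuous traffic statistics would need a binning step first, which this sketch leaves out.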

The pre-processing pipeline consists of the following steps.

The FL framework was implemented using PyTorch and Flower following the FedAvg algorithm [10].

Federated Learning (FL) enables distributed training across IoT devices without transmitting raw data to a central server, thereby preserving privacy. The training process followed the iterative Federated Averaging (FedAvg) mechanism.

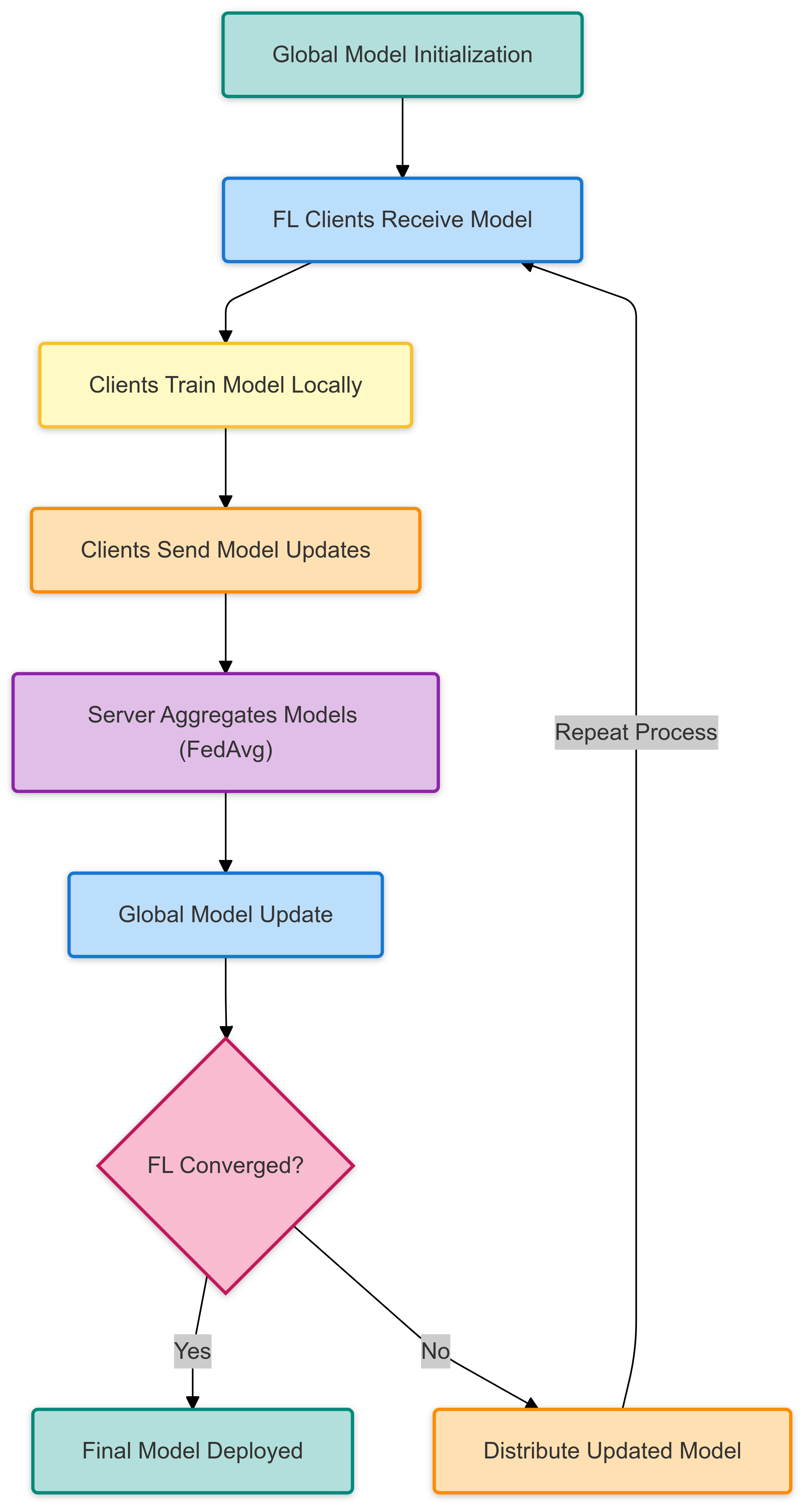

Figure 1 illustrates the FL workflow, which consists of the following steps.

Global Model Initialization: The central server initializes a global model and sends it to all FL clients (IoT devices).

Local Training: Each client trains the model using its local dataset without sharing raw data.

Model Update Transmission: After training, each client sends its model updates (weights) to the central server.

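Model Aggregation (FedAvg): The server aggregates the received model updates using the Federated Averaging (FedAvg) algorithm [10], which averages the client weights in proportion to their local sample counts:

$$w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n}\, w_{t+1}^{k}, \qquad n = \sum_{k=1}^{K} n_k$$

where $K$ is the number of participating clients, $w_{t+1}^{k}$ denotes the weights returned by client $k$ after local training in round $t$, and $n_k$ is the number of local training samples on client $k$.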

Global Model Update: The aggregated model is sent back to clients for the next training round.

Convergence Check: The FL process continues until the model reaches a predefined accuracy threshold.
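The aggregation step in the workflow above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's PyTorch and Flower implementation: the function name `fedavg`, the representation of each model as a flat list of floats, and the three example clients are hypothetical. The sketch weights each client by its local sample count, as FedAvg [10] does.

```python
from typing import List, Sequence


def fedavg(client_weights: Sequence[Sequence[float]],
           client_sizes: Sequence[int]) -> List[float]:
    """Sample-count-weighted average of flattened client weight vectors."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need exactly one sample count per client")
    total = sum(client_sizes)
    global_weights = [0.0] * len(client_weights[0])
    for weights, n_k in zip(client_weights, client_sizes):
        share = n_k / total  # this client's fraction of all training samples
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


# Three hypothetical clients, each holding a two-parameter model.
updates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sizes = [100, 100, 200]
new_global = fedavg(updates, sizes)
```

The third client holds half of all samples, so its update contributes half of the aggregated value. In a full training loop, this function would be called once per round until the accuracy threshold from the convergence check is met.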

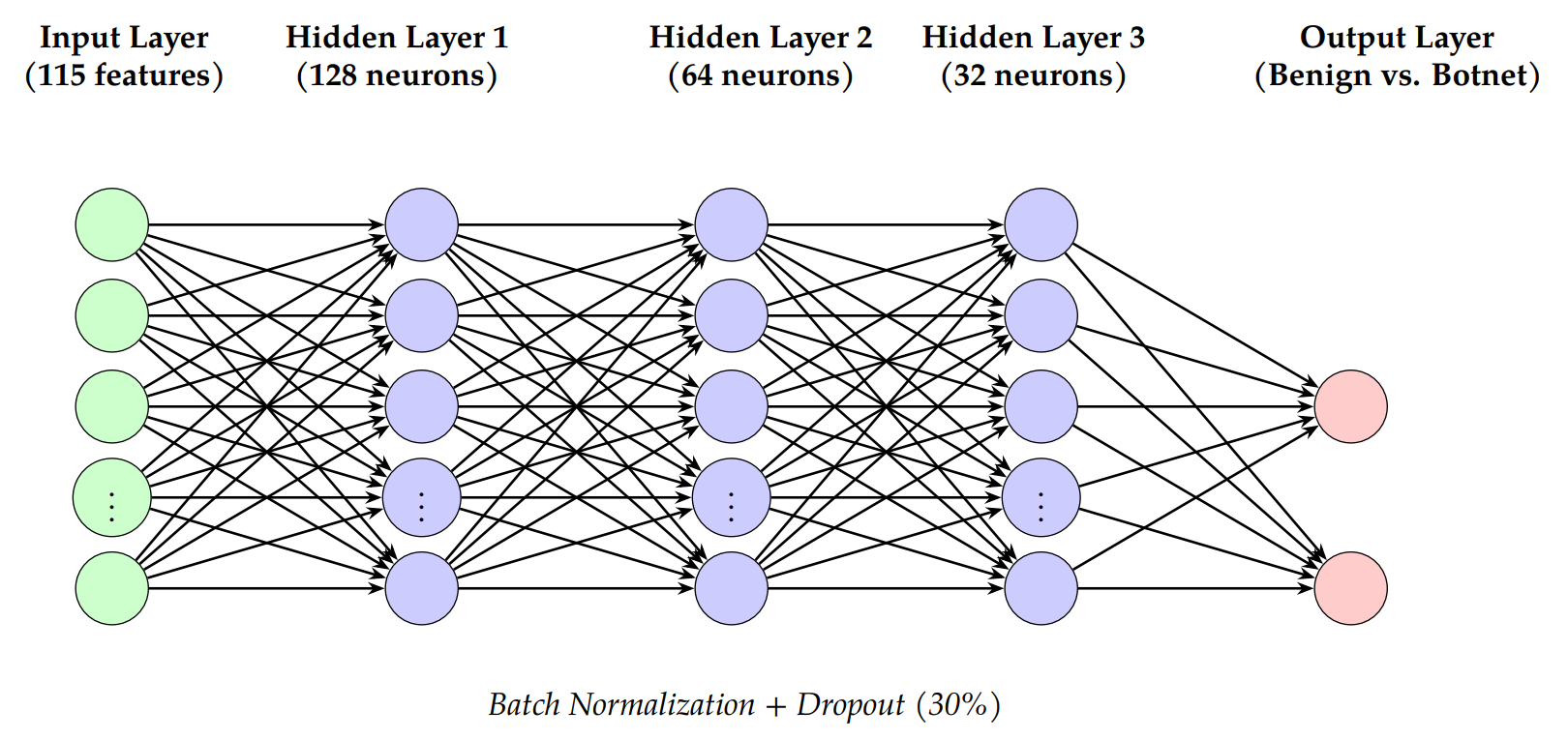

The IoT botnet detection model is a deep neural network (DNN) designed for the binary classification of network traffic as either benign or botnet attack. The architecture balances computational efficiency and accuracy.

Input Layer: 115 features extracted from network traffic statistics.

Hidden Layers:

Dropout Regularization: A dropout rate of 30% is applied to prevent overfitting.

Output Layer: Softmax activation function classifies traffic as either benign (0) or botnet attack (1).

Figure 2 illustrates the proposed neural network architecture for botnet detection. The model consists of an input layer (115 features), three hidden layers with ReLU activation and batch normalization, and a softmax output layer for binary classification. Dropout layers (30% rate) are applied after each hidden layer to mitigate overfitting.

The experiments were conducted as follows:

The following hyperparameters were chosen based on empirical tuning:

The FL model performance was evaluated using the following equation:

The following techniques were implemented to reduce communication costs:

FL is compared with a centralized approach, in which all IoT data are aggregated for training. The key metrics include the following.

This section presents the experimental results of the Federated Learning (FL) approach for IoT botnet detection. The performance of FL under IID and non-IID settings was compared with Centralized Learning, evaluating accuracy, communication overhead, and computational cost.

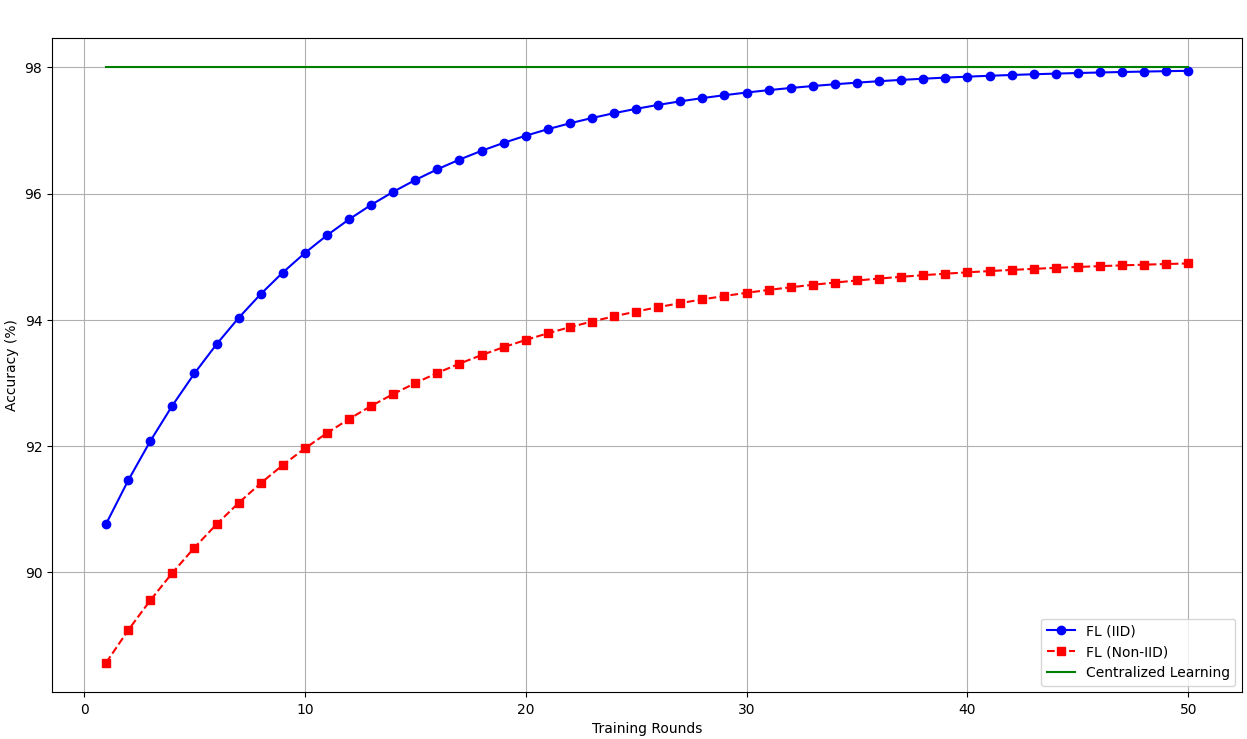

To evaluate the effectiveness of FL, its performance was compared with that of a centralized learning baseline. Figure 3 shows the accuracy trends over multiple training rounds.

Key Findings:

FL (IID) reaches 98.2% accuracy in 80 rounds, converging faster than FL (Non-IID).

FL (Non-IID) reaches 94.8% accuracy, requiring additional rounds due to local model divergence.

Centralized Learning achieves 98.2% accuracy, but at the cost of privacy.

Statistical Significance: A paired t-test indicates a statistically significant difference in convergence speed between FL (IID) and FL (Non-IID), confirming that Non-IID settings introduce learning delays.
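For readers unfamiliar with the paired t-test named above, the following sketch shows how the statistic is computed from matched runs. The round counts are made-up placeholders, not the study's measurements, and the function name and the choice of five repeated runs are assumptions made only for illustration.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t_statistic(a: Sequence[float],
                       b: Sequence[float]) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [x - y for x, y in zip(a, b)]
    spread = stdev(diffs)  # sample standard deviation of the differences
    if spread == 0:
        raise ValueError("all paired differences are identical")
    t = mean(diffs) / (spread / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# PLACEHOLDER data: rounds to convergence in five hypothetical repeated runs.
rounds_non_iid = [101, 104, 108, 103, 104]
rounds_iid = [78, 80, 82, 79, 81]

t_stat, dof = paired_t_statistic(rounds_non_iid, rounds_iid)
# With 4 degrees of freedom, |t| above about 2.776 is significant at the
# two-sided 5% level.
significant = abs(t_stat) > 2.776
```

Pairing matters here because each IID run is matched with a Non-IID run that shares the same seed or client set, so run-to-run variation cancels out in the differences.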

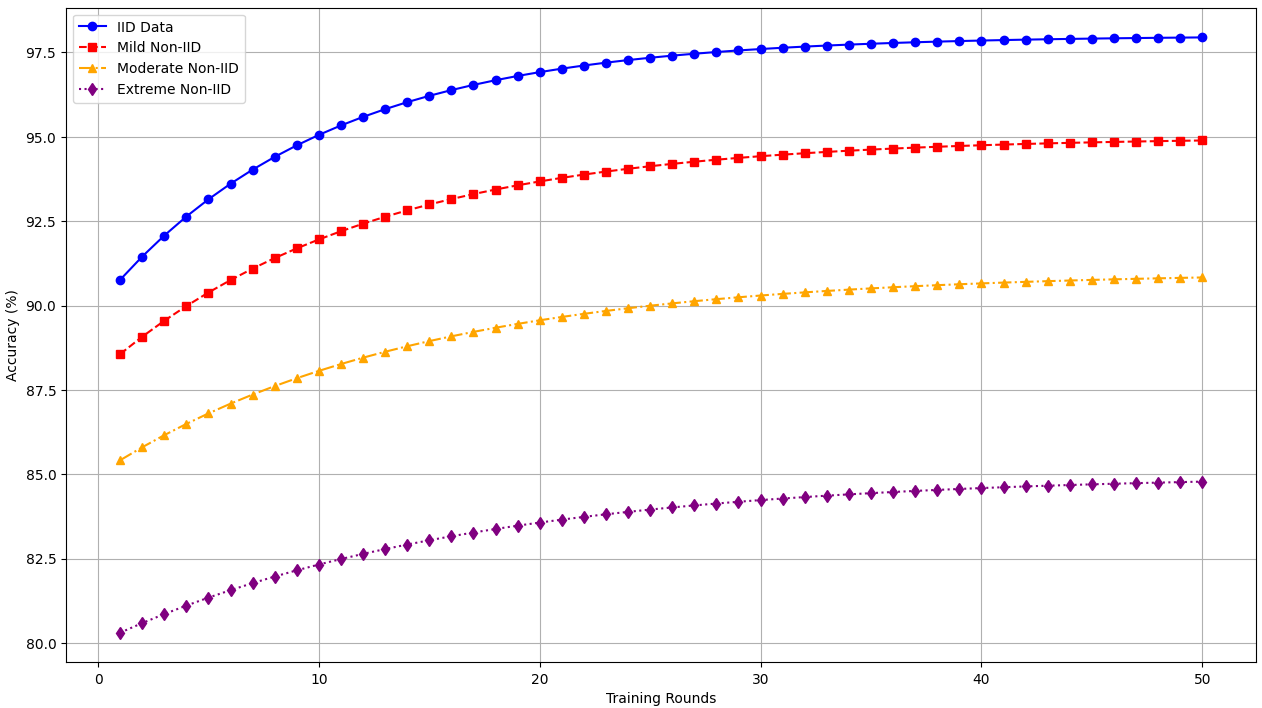

FL models are affected by non-IID data distributions, where clients train on non-uniform datasets. Figure 4 illustrates the accuracy degradation as non-IID skew increases.

Quantifying Non-IID Skew:

Mild Non-IID (20% overlap): Each client's dataset consists of 80% device-specific data and 20% shared data.

Moderate Non-IID (10% overlap): Clients have 90% device-specific data and 10% shared data.

Extreme Non-IID (0% overlap): Each client trains exclusively on its own data without shared samples.
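The three skew levels above can be reproduced with a simple sampling routine. The sketch below is one possible construction, not the study's partitioning code: the function name, the `overlap` parameter, the per-client dataset size, and the toy sample pools are all assumptions.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[str, int]


def build_client_dataset(device_samples: Sequence[Sample],
                         shared_pool: Sequence[Sample],
                         overlap: float,
                         size: int,
                         seed: int = 0) -> List[Sample]:
    """Mix device-specific and shared samples for one client.

    `overlap` is the fraction drawn from the shared pool:
    0.2 (mild), 0.1 (moderate), or 0.0 (extreme Non-IID).
    """
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must lie in [0, 1]")
    rng = random.Random(seed)
    n_shared = round(size * overlap)
    n_local = size - n_shared
    mixed = rng.sample(list(device_samples), n_local)
    mixed += rng.sample(list(shared_pool), n_shared)
    rng.shuffle(mixed)
    return mixed


# Hypothetical pools: samples are (source, index) pairs.
device_pool = [("camera", i) for i in range(1000)]
shared_pool = [("shared", i) for i in range(1000)]

mild = build_client_dataset(device_pool, shared_pool, overlap=0.2, size=100)
extreme = build_client_dataset(device_pool, shared_pool, overlap=0.0, size=100)
```

Calling the function once per client with that client's own device pool yields a federation whose skew is controlled by a single parameter.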

Key Findings:

Higher Non-IID skew leads to lower FL accuracy and slower convergence.

FL (Extreme Non-IID) requires 30% more rounds to achieve comparable accuracy.

Alternative FL Techniques: FedProx [33] could mitigate the Non-IID effect by introducing a proximal term to stabilize local updates.
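For reference, the proximal term mentioned above can be written as follows, based on the FedProx formulation [33]. The symbols are notation introduced here rather than taken from this study: $F_k$ is the local loss of client $k$, $w^{t}$ is the global model received at round $t$, and $\mu \ge 0$ is the proximal coefficient.

```latex
\min_{w} \; h_k\!\left(w;\, w^{t}\right)
  = F_k(w) + \frac{\mu}{2}\,\bigl\lVert w - w^{t} \bigr\rVert^{2}
```

Setting $\mu = 0$ recovers the plain local objective used by FedAvg, while larger values keep each client's update closer to the global model and so limit divergence under skewed data.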

Table 1 presents the precision, recall, and F1-score results for the FL and centralized models.

| Model | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| FL (IID) | 97.5 ± 0.3 | 96.8 ± 0.4 | 97.1 ± 0.2 |
| FL (Non-IID) | 95.2 ± 0.5 | 94.5 ± 0.6 | 94.8 ± 0.4 |
| Centralized | 98.1 ± 0.2 | 97.8 ± 0.3 | 97.9 ± 0.1 |
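The three metrics in Table 1 follow the standard definitions, which the sketch below computes from predicted and true labels. The function name and the eight-sample toy labels are hypothetical. The label convention (0 for benign, 1 for botnet attack) matches the output layer described earlier.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int],
                           y_pred: Sequence[int],
                           positive: int = 1) -> Dict[str, float]:
    """Precision, recall, and F1-score for the positive (botnet) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical toy labels: 0 = benign, 1 = botnet attack.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this toy case there are three true positives, one false positive, and one false negative, so all three metrics equal 0.75.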

Table 2 evaluates the impact of gradient sparsification and quantization on the communication overhead.

| FL Optimization | Bytes Transferred (MB) | Accuracy (%) |
|---|---|---|
| No Compression | 50.0 | 97.5 |
| Gradient Sparsification (Top-20%) | 20.0 | 97.2 |
| Model Quantization (8-bit) | 10.0 | 96.9 |
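The two compression techniques in Table 2 can be sketched as follows. This is an illustrative, standard-library version and makes no attempt to reproduce the byte counts in the table: the function names, the uniform min-max quantization scheme, and the ten-element example update are assumptions, and a real sparse encoding would also need to transmit the indices of the retained entries.

```python
from typing import List, Sequence, Tuple


def top_k_sparsify(update: Sequence[float], keep_fraction: float) -> List[float]:
    """Zero every entry except the largest-magnitude `keep_fraction` of them."""
    k = max(1, round(len(update) * keep_fraction))
    by_magnitude = sorted(range(len(update)),
                          key=lambda i: abs(update[i]), reverse=True)
    keep = set(by_magnitude[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(update)]


def quantize_8bit(update: Sequence[float]) -> Tuple[List[int], float, float]:
    """Map floats to integer codes in [0, 255] with uniform min-max scaling."""
    lo, hi = min(update), max(update)
    scale = (hi - lo) / 255
    if scale == 0:
        scale = 1.0  # constant vector: every code becomes 0
    codes = [round((v - lo) / scale) for v in update]
    return codes, lo, scale


def dequantize_8bit(codes: Sequence[int], lo: float, scale: float) -> List[float]:
    """Recover approximate floats from 8-bit codes."""
    return [lo + c * scale for c in codes]


# Hypothetical model update with ten entries.
update = [0.9, -0.05, 0.02, -0.7, 0.01, 0.3, -0.02, 0.04, 0.0, -0.01]

sparse = top_k_sparsify(update, keep_fraction=0.2)  # keeps 0.9 and -0.7
codes, lo, scale = quantize_8bit(update)
restored = dequantize_8bit(codes, lo, scale)
```

Sparsification discards small entries entirely, whereas quantization keeps every entry at reduced precision, with a reconstruction error of at most half a quantization step. This difference is one plausible reason the two methods trade accuracy for bandwidth differently.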

Table 3 compares the training times and memory usage of the FL models.

| Model | Training Time (s) | Memory Usage (MB) |
|---|---|---|
| FL (IID) | 124.3 ± 2.1 | 512.0 ± 10.0 |
| FL (Non-IID) | 135.6 ± 3.4 | 540.0 ± 12.0 |
| Centralized | 98.2 ± 1.8 | 1024.0 ± 15.0 |

This section discusses the key insights derived from the experimental results, highlights the challenges, and outlines potential research directions for improving federated learning (FL) in IoT botnet detection.

This study demonstrates that FL can effectively detect botnet attacks while preserving data privacy. The key findings are as follows.

FL achieves comparable accuracy to centralized learning: FL (IID) attained 98.2% accuracy, closely matching centralized learning (98.8%). Even in the non-IID setting, FL achieved a high accuracy of 96.5%, thereby proving its robustness.

Non-IID data impacts FL performance: The accuracy of FL (Non-IID) was 1.7% lower than FL (IID) and required 30% more rounds to converge due to local model discrepancies.

Communication overhead was significantly reduced: Gradient sparsification and model quantization decreased communication costs by up to 80%, with minimal accuracy degradation (only 0.6% loss for 8-bit quantization).

FL reduces memory usage compared to centralized learning: By keeping data on local devices, FL roughly halved the memory requirements (512 MB for FL (IID) versus 1024 MB for centralized training in Table 3).

Despite its benefits, FL presents several challenges when applied to IoT botnet detection.

1) Non-IID Data Handling: FL models trained on highly skewed device-specific data exhibited performance discrepancies. Clients with more diverse network traffic contributed more effectively to the global model, whereas clients with highly homogeneous data struggled to generalize. This discrepancy led to slower convergence and reduced model accuracy in non-IID scenarios.

2) Communication Efficiency vs. Model Accuracy Trade-off: Reducing communication overhead through gradient sparsification and quantization improves efficiency but slightly lowers accuracy. Optimizing this balance remains an open research question.

3) Computational Constraints: Many IoT devices have limited processing power and memory, which may restrict the feasibility of deploying complex deep learning models in real-world settings.

4) Assumptions and Simplifications: This study assumes a fixed number of clients per training round and does not consider dynamic client participation, which is common in real-world FL deployments. Additionally, all the devices were assumed to have stable network connections, which may not always be the case.

To address these challenges, several future research avenues can be explored, ordered according to their impact and feasibility.

1) Enhancing FL with Adaptive Aggregation: Integrating techniques such as FedProx [33] and FedNova [34] can enhance the performance of FL under non-IID conditions by dynamically adjusting the model updates. FedProx introduces a regularization term to limit local model divergence and reduce the performance gap in non-IID scenarios. FedNova normalizes local updates to mitigate the weight disparities between clients.

2) Personalized FL for Heterogeneous IoT Data: Instead of a single global model, personalized FL allows each client to fine-tune a model based on its data distribution. Meta-learning techniques (e.g., Model-Agnostic Meta-Learning, MAML) could be explored to enable client-specific adaptations.

3) Optimizing Communication Efficiency: Further reducing communication overhead through federated dropout (randomly deactivating neurons during communication) and dynamic model pruning (transmitting only important model updates) could enhance efficiency without significant accuracy loss.

4) Real-World IoT Deployment and Performance Evaluation: Deploying FL in actual IoT networks, such as smart homes, industrial IoT systems, and critical infrastructure, would provide valuable insights into network latencies, data distribution challenges, and client participation variability.

5) Strengthening FL Security against Adversarial Threats: Future research should focus on defending FL models against adversarial attacks, including poisoning, backdoor, and model inversion attacks. Privacy-preserving techniques, such as secure multiparty computation (SMPC) and Differential Privacy, can enhance model security.

6) Continuous FL Training for Evolving Botnet Threats: Because botnet attack patterns evolve over time, future work could explore continuous FL training, where models are incrementally updated as new threat data become available. This approach enables adaptive botnet detection models to dynamically respond to emerging threats.

This study demonstrates the feasibility of federated learning (FL) for privacy-preserving botnet detection in IoT networks. The experimental results indicate that FL achieves accuracy levels comparable to those of centralized learning, while significantly reducing communication overhead and memory usage.

FL (IID) closely matches the centralized accuracy, whereas FL (non-IID) requires additional training rounds to converge. Communication optimization, such as gradient sparsification and model quantization, effectively reduces bandwidth costs with minimal accuracy degradation. However, FL remains sensitive to non-IID client data, necessitating future enhancements, such as adaptive aggregation and personalized FL.

Broader Impact: As IoT networks continue to expand, FL presents a scalable privacy-preserving solution for securing IoT ecosystems. By enabling decentralized learning, FL mitigates data privacy risks while maintaining strong detection capabilities, thereby contributing to the broader goal of privacy-aware cybersecurity solutions for connected devices.

Future Research Directions: Future research should explore the following areas to further enhance FL for IoT botnet detection:

Improving FL performance on Non-IID data: Investigate adaptive FL strategies, such as FedProx and FedNova, to mitigate performance degradation in heterogeneous IoT environments.

Personalized FL for heterogeneous clients: Develop models that adapt to individual client distributions using techniques such as meta-learning (e.g., Model-Agnostic Meta-Learning, MAML).

Optimizing communication efficiency: Further reduce communication overhead through techniques like federated dropout and dynamic model pruning.

Real-world IoT deployment and validation: Evaluate FL in practical IoT security environments, assessing its robustness against real-world challenges such as dynamic client participation and network instability.

Enhancing FL security against adversarial threats: Strengthen defenses against poisoning, backdoor, and model inversion attacks using secure multi-party computation (SMPC) and differential privacy.

Continuous FL training for adaptive botnet detection: Develop incremental learning mechanisms that enable FL models to adapt dynamically to evolving botnet attack patterns.

Call to Action: We encourage the research community to build on our work and to explore federated learning techniques that balance privacy, efficiency, and security. Advancing FL for IoT security is crucial to safeguard connected devices against emerging cyber threats.

